Server

Colocation

Server

Colocation

CDN

Network

CDN

Network

Linux Cloud

Hosting

Linux Cloud

Hosting

VMware Public

Cloud

VMware Public

Cloud

Multi-Cloud

Hosting

Multi-Cloud

Hosting

Cloud

Server Hosting

Cloud

Server Hosting

Kubernetes

Kubernetes

API Gateway

API Gateway

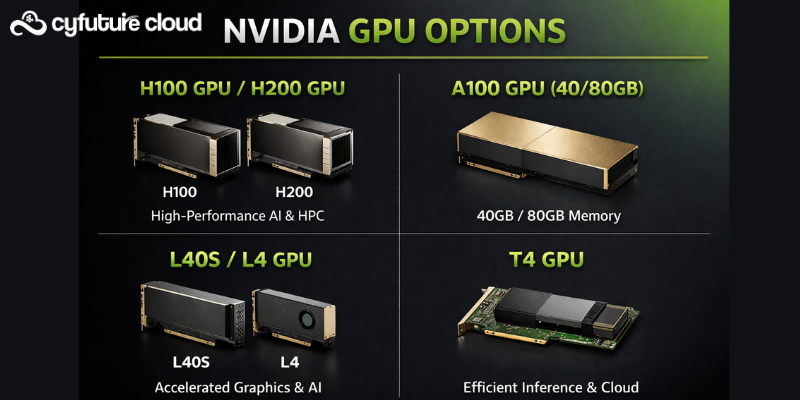

Cyfuture Cloud's GPU as a Service (GPUaaS) provides access to a range of high-performance NVIDIA, AMD, and Intel GPUs optimized for AI, machine learning, HPC, and rendering workloads. Key options include NVIDIA H100 GPU, A100 GPU, V100, T4, L40S, AMD MI300X, and Intel GAUDI 2, available in flexible configurations from single instances to multi-GPU clusters.

NVIDIA dominates Cyfuture Cloud's GPUaaS lineup, catering to diverse needs from inference to large-scale training. The H100 and H200 GPU lead for cutting-edge AI, offering massive FP8 performance and high memory bandwidth ideal for LLMs and generative AI.

H100 GPU/H200 GPU: Enterprise-grade for heavy training/inference; up to 80GB HBM3 memory; supports multi-instance GPU (MIG) for isolation.

A100 GPU (40/80GB): Versatile for deep learning; excels in mixed-precision workloads; common in 4-8 GPU clusters.

V100: Reliable mid-tier for legacy ML; 32GB HBM2; cost-effective for data analytics.

L40S/L4: Optimized for rendering/visualization; L40S handles real-time inference with 48GB GDDR6.

T4: Entry-level for lightweight inference; power-efficient at 70W TDP; suits edge-like cloud tasks.

These GPUs integrate with NVLink for inter-GPU communication, ensuring low-latency scaling in clusters.

Cyfuture Cloud diversifies beyond NVIDIA for specialized or cost-sensitive workloads. AMD MI300X targets high-memory AI training, while Intel GAUDI 2 focuses on scalable HPC.

|

GPU Model |

Key Strengths |

Memory |

Best For |

Configurations |

|

AMD MI300X |

Massive HBM3e capacity (192GB) |

192GB |

Large-scale training, data analytics |

Single to 8-GPU nodes |

|

Intel GAUDI 2 |

Ethernet-based scaling; open ecosystem |

96GB HBM2e |

HPC, distributed ML |

Multi-node clusters |

These options reduce vendor lock-in and leverage frameworks like ROCm for AMD.

GPUaaS supports spot/preemptible (cheapest, interruptible), dedicated instances (consistent for production), and serverless (auto-scaling, pay-per-use). One-click deployment provisions instances in under 60 seconds with pre-installed ML stacks.

Pricing is hourly/monthly, up to 75% cheaper than AWS/Azure, with data center in India for low latency in Delhi. Scale from 1 GPU to clusters with AMD EPYC/Intel Xeon CPUs and 2TB DDR5 RAM.

Tailored for AI training, LLM inference, rendering, and HPC, these GPUs enable 5x faster model deployment. Benefits include no CapEx, 99.99% uptime, Kubernetes integration, and hybrid cloud support.

Cyfuture Cloud's GPUaaS delivers comprehensive options like NVIDIA H100/A100 suites, AMD MI300X, and Intel GAUDI 2, empowering scalable AI without hardware ownership. This cost-efficient, flexible service accelerates innovation for startups to enterprises, with seamless provisioning and robust support.

Q1: How do I select the right GPU as a Service for my workload?

A: Match H100/A100 for training heavy LLMs; T4/L4 for inference; MI300X for memory-intensive tasks. Use Cyfuture's console for benchmarks and workload estimators.

Q2: What interconnects and storage are available?

A: NVLink/InfiniBand for NVIDIA; high-speed NVMe SSDs up to 100TB/node; Ethernet for GAUDI. Pre-configured for distributed training.

Q3: Are bare metal GPU Cloud server offered?

A: Yes, dedicated bare metal with 4-8 GPUs per node for full control, bypassing virtualization overhead.

Q4: How does pricing work?

A: Pay-as-you-go (hourly from spot rates), reserved for discounts; up to 70% savings vs. hyperscalers. Transparent calculator on cyfuture.ai.

Let’s talk about the future, and make it happen!

By continuing to use and navigate this website, you are agreeing to the use of cookies.

Find out more