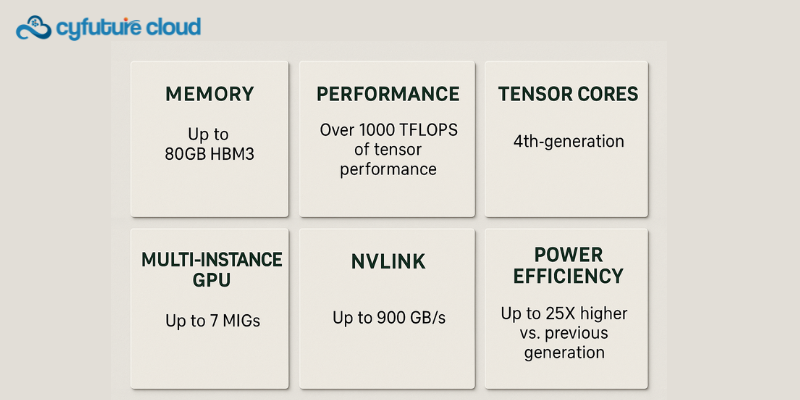

The NVIDIA A100 Tensor Core GPU is a high-performance data center accelerator engineered to power modern AI, machine learning, deep learning, data analytics, and High-Performance Computing (HPC) workloads. Built on NVIDIA’s Ampere architecture, the A100 delivers massive compute density, exceptional memory bandwidth, and advanced virtualization through Multi-Instance GPU (MIG) technology.

With up to 80GB of HBM2e memory, third-generation Tensor Cores, and ultra-fast NVLink interconnect, the NVIDIA A100 GPU is designed to handle large AI models, complex simulations, and high-throughput inference at scale. Cyfuture Cloud provides enterprise-grade cloud access to NVIDIA A100 Tensor Core GPUs, enabling organizations to deploy AI and HPC workloads without the cost and complexity of on-premise infrastructure.

The NVIDIA A100 GPU was launched in 2020 as the successor to the Volta-based V100 GPU. It is purpose-built for data center and scientific environments, delivering a significant leap in performance, scalability, and efficiency for AI and HPC applications.

The A100 features 6,912 CUDA cores and 432 third-generation Tensor Cores, supporting multiple precision formats such as FP64, TF32, FP16, BF16, INT8, and INT4. This versatility allows organizations to optimize workloads ranging from AI training and inference to advanced analytics and scientific simulations.
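The headline throughput figures in the list below can be checked with some quick arithmetic: multiply the core counts above by a clock rate and by the work each core does per clock. This is a rough sketch under stated assumptions, not a benchmark.

It relies on two figures that come from NVIDIA's published specifications rather than this page:
- a 1,410 MHz boost clock
- a dense rate of 256 FP16 fused multiply-adds per Tensor Core per clock

Sustained throughput in real workloads is lower than these theoretical peaks.

```python
# Back-of-envelope reconstruction of A100 peak throughput from unit counts.
# Assumed (NVIDIA spec values, not from this page): 1,410 MHz boost clock and
# 256 dense FP16 FMA per Tensor Core per clock.

BOOST_CLOCK_HZ = 1.41e9            # assumed A100 boost clock
CUDA_CORES = 6912                  # FP32 CUDA cores (from the text)
TENSOR_CORES = 432                 # third-generation Tensor Cores (from the text)
FLOPS_PER_FMA = 2                  # a fused multiply-add counts as two FLOPs
FP16_FMA_PER_TC_PER_CLOCK = 256    # assumed dense FP16 rate per Tensor Core


def peak_tflops(units: int, fma_per_unit_per_clock: int,
                clock_hz: float = BOOST_CLOCK_HZ) -> float:
    """Theoretical peak = units x FMA/clock x 2 FLOPs x clock, in TFLOPS."""
    return units * fma_per_unit_per_clock * FLOPS_PER_FMA * clock_hz / 1e12


fp32 = peak_tflops(CUDA_CORES, 1)                               # one FP32 FMA per core per clock
fp16_dense = peak_tflops(TENSOR_CORES, FP16_FMA_PER_TC_PER_CLOCK)
fp16_sparse = 2 * fp16_dense  # 2:4 structured sparsity doubles effective math throughput

print(f"FP32:             {fp32:.1f} TFLOPS")
print(f"FP16/BF16 dense:  {fp16_dense:.0f} TFLOPS")
print(f"FP16/BF16 sparse: {fp16_sparse:.0f} TFLOPS")
```

The results land on the 19.5, 312 and 624 TFLOPS figures quoted below. This suggests those numbers are clock-times-units peaks rather than measured throughput.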

Available in 40GB HBM2 and 80GB HBM2e variants

Memory bandwidth of roughly 1.6 TB/s on the 40GB model and up to about 2 TB/s on the 80GB model, suited to large datasets and models

Up to 19.5 TFLOPS FP32

Up to 312 TFLOPS FP16/BF16 on Tensor Cores (dense)

Up to 624 TFLOPS with structured sparsity, enabling faster AI training and inference

Third-generation Tensor Cores accelerate AI workloads across multiple precisions

NVIDIA cites up to 20× higher AI performance than the previous-generation V100 on selected workloads

Multi-Instance GPU (MIG) can partition a single A100 GPU into up to seven isolated GPU instances

Ideal for cloud environments, multi-tenant workloads, and efficient resource utilization

Third-generation NVLink enables up to 600 GB/s GPU-to-GPU bandwidth

Supports large-scale, distributed AI and HPC workloads

Thermal Design Power (TDP) ranges from 250W to 400W, depending on form factor
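A simple roofline model helps estimate whether a workload is limited by the compute figures above or by memory bandwidth. Attainable throughput is the lower of two limits: the compute peak, and bandwidth multiplied by the kernel's FLOPs per byte.

This sketch makes the following assumptions:
- a compute peak of 312 TFLOPS (the dense FP16 figure)
- 2,039 GB/s of bandwidth, the figure NVIDIA lists for the 80GB SXM model

The two example kernel intensities are illustrative, not measured.

```python
# Roofline sketch for an A100 (80GB SXM figures assumed).
PEAK_FLOPS = 312e12              # dense FP16 Tensor Core peak, FLOP/s
BANDWIDTH_BYTES_PER_S = 2039e9   # assumed HBM2e bandwidth of the 80GB SXM model


def attainable_flops(arithmetic_intensity: float) -> float:
    """Roofline: min(compute peak, bandwidth x FLOPs-per-byte)."""
    return min(PEAK_FLOPS, BANDWIDTH_BYTES_PER_S * arithmetic_intensity)


# FLOPs per byte at which the memory roof meets the compute roof.
ridge_point = PEAK_FLOPS / BANDWIDTH_BYTES_PER_S

# An FP16 vector add moves 6 bytes (two 2-byte reads, one 2-byte write) per FLOP.
vector_add_ai = 1 / 6
large_gemm_ai = 500.0  # illustrative intensity for a large matrix multiply

print(f"Ridge point: {ridge_point:.0f} FLOPs/byte")
print(f"FP16 vector add: {attainable_flops(vector_add_ai) / 1e12:.2f} TFLOPS (memory-bound)")
print(f"Large GEMM:      {attainable_flops(large_gemm_ai) / 1e12:.0f} TFLOPS (compute-bound)")
```

Kernels below the ridge point gain more from the bandwidth of the 80GB model than from Tensor Core throughput. Large dense matrix multiplies sit above it.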

The Multi-Instance GPU (MIG) capability allows enterprises to run multiple workloads on a single physical GPU without performance interference. This significantly improves utilization and cost efficiency in cloud and AI-as-a-service environments.
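One simplified way to reason about MIG partitioning is slice accounting: each instance profile consumes a fixed number of compute and memory slices.

This sketch assumes an A100 40GB with seven compute slices and eight 5GB memory slices, and it uses NVIDIA's 40GB profile names. It ignores the placement rules the driver also enforces. A request that passes this check may therefore still be rejected on a real GPU.

```python
# Simplified MIG slice accounting for an A100 40GB (placement rules ignored).
COMPUTE_SLICES, MEMORY_SLICES = 7, 8  # assumed per-GPU slice budget

# Profile name -> (compute slices, memory slices) it consumes.
A100_40GB_PROFILES = {
    "1g.5gb": (1, 1),
    "2g.10gb": (2, 2),
    "3g.20gb": (3, 4),
    "4g.20gb": (4, 4),
    "7g.40gb": (7, 8),
}


def fits(requested: list[str]) -> bool:
    """Return True if the requested instances fit within the slice budget."""
    compute = sum(A100_40GB_PROFILES[p][0] for p in requested)
    memory = sum(A100_40GB_PROFILES[p][1] for p in requested)
    return compute <= COMPUTE_SLICES and memory <= MEMORY_SLICES


print(fits(["1g.5gb"] * 7))                     # seven small tenants
print(fits(["3g.20gb", "3g.20gb"]))             # two medium tenants
print(fits(["3g.20gb", "3g.20gb", "1g.5gb"]))   # would need a ninth memory slice
```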

The third-generation Tensor Cores enable mixed-precision computing, allowing faster processing while maintaining model accuracy. Combined with CUDA, NVIDIA GPU Cloud (NGC) containers, and optimized AI frameworks, the A100 simplifies development and deployment of advanced workloads.
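Part of what makes mixed precision workable is the trade-off between the 16-bit formats. FP16 has more mantissa bits but a narrow exponent range. BF16 keeps FP32's 8-bit exponent with fewer mantissa bits.

This sketch emulates both formats in plain Python:
- FP16 uses the standard `struct` half-precision format `'e'`.
- BF16 is approximated by truncating a float32 to its upper 16 bits. Hardware typically rounds to nearest even instead.

It also illustrates loss scaling, a common mixed-precision training technique that this page does not itself describe.

```python
import struct


def to_fp16(x: float) -> float:
    """Round-trip x through IEEE 754 half precision (struct format 'e')."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_bf16(x: float) -> float:
    """Approximate bfloat16 by keeping the upper 16 bits of the float32 encoding.
    Truncates rather than rounding to nearest even, for simplicity."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    return struct.unpack("<f", struct.pack("<I", bits & 0xFFFF0000))[0]


tiny_grad = 1e-8
print(to_fp16(tiny_grad))        # 0.0: below FP16's smallest subnormal, so it underflows
print(to_bf16(tiny_grad) > 0)    # True: BF16 shares FP32's exponent range
print(to_bf16(70000.0))          # 69632.0: only 7 mantissa bits survive

# Loss scaling: scale up before casting to FP16, unscale in higher precision.
scale = 1024.0
recovered = to_fp16(tiny_grad * scale) / scale
print(f"{recovered:.3e}")
```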

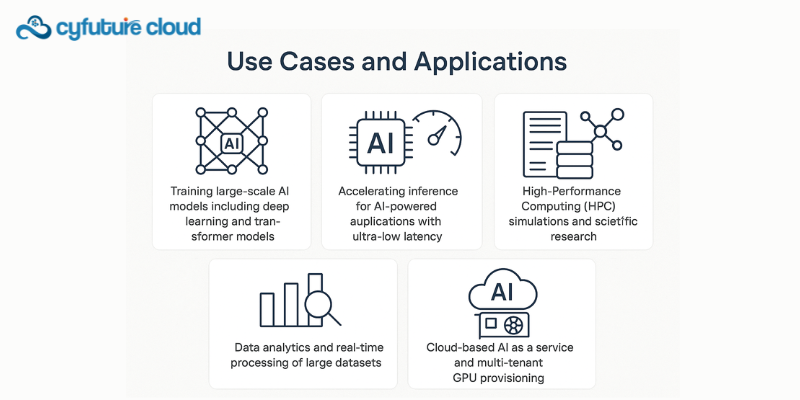

The NVIDIA A100 GPU is widely adopted across industries for compute-intensive tasks, including:

AI training and inference, including large language models and computer vision

Big data analytics and real-time data processing

Scientific simulations and HPC research

Cloud-based AI services and GPU virtualization

Enterprise machine learning platforms

Cyfuture Cloud offers NVIDIA A100 GPUs as part of its high-performance cloud infrastructure, giving enterprises on-demand access to world-class GPU acceleration. With support for MIG-based GPU partitioning, high-speed NVLink interconnect, and optimized AI frameworks, Cyfuture Cloud enables scalable and secure AI and HPC deployments.

By eliminating the need for large capital investments, Cyfuture Cloud allows businesses to focus on innovation while benefiting from low-latency connectivity, enterprise-grade security, and flexible GPU consumption models.

Q: What makes NVIDIA A100 better than previous GPUs?

A: NVIDIA cites up to 20× higher AI performance than the V100 on selected workloads. The A100 also offers higher memory bandwidth and MIG technology, which enables efficient GPU sharing and higher utilization.

Q: What memory options are available with the A100 GPU?

A: NVIDIA A100 GPUs are available in 40GB HBM2 and 80GB HBM2e configurations, with the 80GB version ideal for large AI models.
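A rough way to see what "large AI models" means for each memory option is to divide capacity by bytes per parameter.

This sketch counts model weights only. It ignores activations, KV caches, optimizer state and framework overhead, so real requirements (especially for training) are considerably higher. GB is treated as 10^9 bytes for simplicity.

```python
# Weights-only memory estimate for the 40GB and 80GB A100 variants.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1, "int4": 0.5}


def weight_gb(num_params: float, dtype: str) -> float:
    """Memory needed just to hold the weights, in GB."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def max_params_billions(gpu_gb: float, dtype: str) -> float:
    """Upper bound on parameter count (billions) whose weights fit in gpu_gb."""
    return gpu_gb / BYTES_PER_PARAM[dtype]


for gpu_gb in (40, 80):
    for dtype in ("fp16", "int8"):
        print(f"A100 {gpu_gb}GB, {dtype}: at most ~{max_params_billions(gpu_gb, dtype):.0f}B parameters")

print(f"7B-parameter model in fp16: {weight_gb(7e9, 'fp16'):.0f} GB of weights")
```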

Q: Can multiple A100 GPUs be connected together?

A: Yes. Using third-generation NVLink, multiple A100 GPUs can be interconnected with up to 600 GB/s bandwidth for large distributed workloads.
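To get a feel for what the NVLink figure means in distributed training, here is an idealized estimate of a ring all-reduce, the collective commonly used to sum gradients across GPUs.

This sketch makes the following assumptions:
- The 600 GB/s figure is total bidirectional bandwidth, leaving about 300 GB/s per direction.
- The full per-direction bandwidth is available between ring neighbours, as in NVSwitch-based systems.
- Latency and protocol overhead are ignored.

```python
# Idealized ring all-reduce time over NVLink (latency and overhead ignored).
PER_DIRECTION_BW = 300e9  # bytes/s: assumed half of the 600 GB/s bidirectional figure


def ring_allreduce_seconds(payload_bytes: float, num_gpus: int,
                           per_direction_bw: float = PER_DIRECTION_BW) -> float:
    """In a ring all-reduce each GPU sends 2 * (N - 1) / N of the payload."""
    traffic = 2 * (num_gpus - 1) / num_gpus * payload_bytes
    return traffic / per_direction_bw


grads = 1e9 * 2  # illustrative: 1B parameters of FP16 gradients = 2 GB
t = ring_allreduce_seconds(grads, num_gpus=8)
print(f"~{t * 1e3:.1f} ms per all-reduce (idealized)")
```

The per-GPU traffic approaches twice the payload as the GPU count grows. Interconnect bandwidth therefore sets a floor on synchronization time regardless of how many A100s are added.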

The NVIDIA A100 GPU remains one of the most powerful and versatile data center GPUs for AI, data analytics, and high-performance computing. Its advanced Tensor Cores, massive memory capacity, and Multi-Instance GPU (MIG) technology make it a preferred choice for enterprises and researchers worldwide.

With Cyfuture Cloud’s NVIDIA A100 GPU offerings, organizations gain scalable, cost-effective access to industry-leading GPU performance—accelerating innovation, reducing deployment complexity, and enabling next-generation AI applications with confidence.
