The rapid evolution of artificial intelligence has pushed computing infrastructure to its limits. As large language models (LLMs), generative AI, and data-intensive workloads continue to expand, organizations need GPUs that deliver not only raw compute power but also massive memory bandwidth and efficiency. The NVIDIA H200 GPU represents a significant step forward in this direction.

Built on NVIDIA's Hopper architecture, the H200 GPU is designed specifically for AI training, AI inference, large language models, and high-performance computing (HPC). With a major upgrade in memory capacity and bandwidth, it addresses one of the biggest bottlenecks in modern AI systems: data movement at scale.

At Cyfuture Cloud, the NVIDIA H200 GPU is available through flexible GPU as a Service (GPUaaS) models, enabling enterprises to deploy cutting-edge AI infrastructure without heavy upfront investment.

The NVIDIA H200 GPU is a high-performance Tensor Core GPU from the Hopper family, purpose-built for enterprise AI and data center workloads. Its most notable advancement is the introduction of 141 GB of HBM3e memory with up to 4.8 TB/s memory bandwidth, nearly doubling memory capacity compared to the H100.

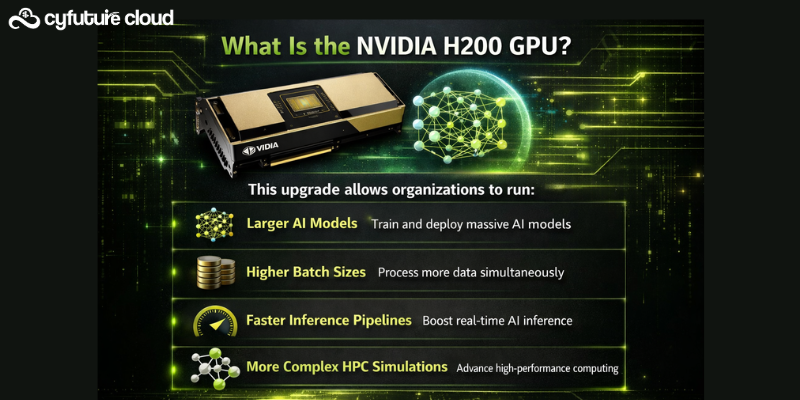

This upgrade allows organizations to run:

- Larger AI models
- Higher batch sizes
- Faster inference pipelines
- More complex HPC simulations

In real-world scenarios, the H200 delivers up to 2× faster LLM inference than the H100, making it ideal for production-grade AI systems.

The H200 builds on the strengths of Hopper architecture while addressing memory-bound AI workloads.

- CUDA Cores: 16,896
- Tensor Cores: 528 (4th Generation)
- Memory: 141 GB HBM3e
- Memory Bandwidth: Up to 4.8 TB/s
- Tensor Performance: Up to 3,958 TFLOPS FP8 / 3,958 TOPS INT8 (with sparsity)
- Form Factors: SXM and PCIe
- TDP: Up to 700 W (SXM variant)

The enhanced Transformer Engine dynamically optimizes precision (FP8, FP16, FP32) for transformer-based models, improving both performance and efficiency.

Modern AI workloads are increasingly memory-constrained, not compute-constrained. Large models with hundreds of billions—or even trillions—of parameters require fast access to vast datasets.

The H200’s HBM3e memory enables:

- Lower latency during inference
- Fewer memory swaps
- Faster training convergence
- Support for models up to 1.8 trillion parameters

This makes the H200 particularly effective for generative AI, multimodal models, and real-time AI inference.
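A rough way to see what the capacity figures mean in practice is to estimate how much memory a model's weights occupy at a given precision. The sketch below counts weights only (no activations, KV cache, or optimizer state) and treats 1 GB as 10^9 bytes. The helper names and the example model sizes are assumptions chosen for illustration.

```python
import math

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"FP32": 4, "FP16": 2, "FP8": 1}

# Per-GPU memory capacities quoted in this article (GB).
GPU_MEMORY_GB = {"H100": 80, "H200": 141}


def weight_footprint_gb(params_billion: float, precision: str) -> float:
    """Weights-only footprint in GB: (billions of params) x (bytes per param)."""
    return params_billion * BYTES_PER_PARAM[precision]


def min_gpus_for_weights(params_billion: float, precision: str, gpu: str) -> int:
    """Smallest GPU count whose combined memory holds the weights alone."""
    return math.ceil(weight_footprint_gb(params_billion, precision) / GPU_MEMORY_GB[gpu])


# Assumed examples: a 70B model in FP16 and a 1.8T model in FP8.
for params_billion, precision in ((70, "FP16"), (1800, "FP8")):
    size_gb = weight_footprint_gb(params_billion, precision)
    for gpu in ("H100", "H200"):
        count = min_gpus_for_weights(params_billion, precision, gpu)
        print(f"{params_billion}B @ {precision}: {size_gb:.0f} GB of weights -> at least {count} x {gpu}")
```

Under these assumptions, trillion-parameter models still span many GPUs. The larger per-GPU memory reduces how many are needed rather than removing the need for a cluster.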

Cyfuture Cloud integrates the NVIDIA H200 GPU into enterprise-grade GPU clusters, offering it through GPU as a Service (GPUaaS).

- On-demand H200 GPU access
- Multi-GPU clusters with NVLink (up to 900 GB/s)
- MIG support (up to 7 isolated GPU instances per card)
- Secure, India-based data centers
- 99.99% uptime SLA
- No CapEx: pay only for what you use

This approach allows startups, enterprises, and research teams to scale AI workloads without purchasing hardware costing $30,000–$46,000 per GPU.
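The buy-versus-rent trade-off can be sketched as a simple break-even calculation. The purchase prices below are the range quoted in this article. The hourly rate is a purely hypothetical placeholder, not a Cyfuture Cloud price, and the sketch ignores power, cooling, hosting, and staffing costs that ownership would add.

```python
def break_even_hours(purchase_price_usd: float, hourly_rate_usd: float) -> float:
    """GPU-hours of rental that cost the same as buying one GPU outright."""
    if hourly_rate_usd <= 0:
        raise ValueError("hourly_rate_usd must be positive")
    return purchase_price_usd / hourly_rate_usd


# Hypothetical rental rate, for illustration only; substitute a real quote.
HYPOTHETICAL_HOURLY_RATE_USD = 4.0

for price in (30_000, 46_000):
    hours = break_even_hours(price, HYPOTHETICAL_HOURLY_RATE_USD)
    years = hours / (24 * 365)
    print(f"${price:,} purchase = {hours:,.0f} rented GPU-hours "
          f"(~{years:.1f} years of continuous use)")
```

Teams with bursty or short-lived workloads sit well below the break-even point, which is the case the pay-per-use model targets.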

The H200 GPU on Cyfuture Cloud is optimized for:

- Large Language Models (LLMs) – training and inference
- Generative AI – text, image, video, and multimodal models
- AI Inference at Scale – low-latency production workloads
- High-Performance Computing (HPC) – simulations, modeling
- Enterprise AI Pipelines – fine-tuning, MLOps, deployment

While compute performance remains similar, the key advantage of the H200 lies in memory.

| Feature | H100 | H200 |
|---|---|---|
| Memory | 80 GB HBM3 | 141 GB HBM3e |
| Bandwidth | ~3.35 TB/s | Up to 4.8 TB/s |
| LLM Inference | Baseline | Up to 2× faster |
| Model Size Support | Large | Extremely large |

For memory-intensive AI workloads, the H200 offers significantly better cost-per-token efficiency.
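The memory and bandwidth rows of the comparison can be reduced to simple ratios. The short sketch below uses only the figures from the table; the dictionary and function names are illustrative.

```python
# Figures taken from the comparison table above.
H100 = {"memory_gb": 80, "bandwidth_tb_s": 3.35}
H200 = {"memory_gb": 141, "bandwidth_tb_s": 4.8}


def h200_over_h100(metric: str) -> float:
    """Ratio of the H200 figure to the H100 figure for one metric."""
    return H200[metric] / H100[metric]


for metric in ("memory_gb", "bandwidth_tb_s"):
    print(f"{metric}: H200 is {h200_over_h100(metric):.2f}x the H100")
```

Neither ratio alone reaches the "up to 2×" inference figure, which suggests that the quoted speedup comes from capacity and bandwidth acting together on memory-bound workloads, and that it will vary with model and batch size.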

The NVIDIA H200 GPU is a critical advancement for organizations building next-generation AI systems. Its 141 GB of HBM3e memory, 4.8 TB/s of bandwidth, and AI-optimized architecture make it ideal for large-scale inference, training, and HPC workloads.

With Cyfuture Cloud’s GPU as a Service, businesses gain immediate access to H200-powered infrastructure—without long procurement cycles or capital expenditure. This combination delivers faster time-to-value, lower total cost of ownership, and production-ready AI performance.

The NVIDIA H200 GPU is a Hopper-based Tensor Core GPU built for large-scale AI, large language models (LLMs), and high-performance computing (HPC). It features 141 GB of HBM3e memory and up to 4.8 TB/s memory bandwidth, enabling faster AI training and inference for memory-intensive workloads.

A physical NVIDIA H200 GPU typically costs between $30,000 and $46,000, depending on configuration. With Cyfuture Cloud GPU as a Service (GPUaaS), businesses can access H200 GPUs on a flexible, pay-as-you-use model without large upfront investment.

No. The H200 GPU is designed exclusively for enterprise AI, data centers, and HPC workloads. It is not optimized for gaming or consumer graphics applications.

The H200 offers nearly double the memory of the H100 (141 GB vs 80 GB) and significantly higher memory bandwidth (up to 4.8 TB/s vs ~3.35 TB/s). While compute performance is similar, the H200 delivers 1.9×–2× faster LLM inference due to reduced memory bottlenecks.

Yes, the NVIDIA MX150 can run GTA 5, but only at low graphics settings and lower resolutions.

RTX GPUs are better than GTX GPUs because they support ray tracing, DLSS, and newer architectures, offering higher performance and better efficiency.

The B200 GPU is newer and more powerful, but the H200 remains highly effective and widely deployed for enterprise AI, LLM inference, and HPC workloads, especially where memory capacity is critical.

“Best” depends on the use case. Enterprise AI GPUs like the H200 differ significantly from gaming GPUs, which prioritize frame rates and visuals rather than AI throughput.

An RTX 3060 can reach 240 FPS only in lightweight or esports titles at low or medium settings. It is not guaranteed for all modern games.

For enterprise AI and data center workloads, GPUs like the NVIDIA H200 and newer architectures lead in performance and scalability.

For AI and HPC workloads, yes. The A100 is designed for data centers, while the RTX 4090 is optimized for gaming and creative workloads.

The RTX 40 series offers better performance, power efficiency, and advanced features compared to the RTX 30 series.

No, the Quadro 2000 does not officially support 4K output.

FPS depends on the game, resolution, and settings. Gaming GPUs vary widely, while enterprise GPUs like the H200 are not designed for FPS-based workloads.

16GB is better, especially for modern applications, higher resolutions, and AI or data-intensive workloads.

The H200 matches the H100's compute performance but nearly doubles memory (from 80 GB to 141 GB of HBM3e) and increases bandwidth from ~3.35 TB/s to 4.8 TB/s, resulting in 1.9×–2× faster LLM inference and improved cost-per-token efficiency.

The H200 is ideal for generative AI training and inference, large language models (such as 70B+ parameter models), HPC simulations, and multimodal AI workloads. Cyfuture Cloud provides scalable H200 clusters for seamless deployment.

Yes. The NVIDIA H200 GPU, released in mid-2024, is available via Cyfuture Cloud GPUaaS, offering flexible hourly and monthly pricing without hardware ownership.

Yes. The H200 supports Multi-Instance GPU (MIG) with up to 7 isolated GPU instances per card, enabling secure multi-tenancy for development, testing, and production environments on Cyfuture Cloud.

The NVIDIA H200 GPU does not have a fixed retail price because it is mainly sold to enterprises, cloud providers, and data centers. A single H200 typically costs between $30,000 and $46,000, depending on configuration and vendor agreements. Pricing may vary further when the GPU is purchased as part of a GPU cloud or AI infrastructure solution.

For AI, machine learning, and high-performance computing (HPC) workloads, the H200 GPU is currently one of the most powerful GPUs available. It is specifically designed for large language models (LLMs), generative AI, and data-intensive workloads. However, it is not meant for everyday consumer or gaming use.

Yes, the H200 GPU is one of NVIDIA’s latest data-center GPUs, built on the Hopper architecture. It is an advanced upgrade over the H100, offering significantly higher memory bandwidth with HBM3e, making it ideal for modern AI workloads.

Technically, yes, but it's not recommended. The H200 GPU is designed for enterprise AI and data center tasks, not gaming. It lacks the gaming-optimized drivers and features found in consumer GPUs like the NVIDIA RTX series. For gaming, RTX GPUs deliver far better value and performance.

There have been instances where NVIDIA experienced major market capitalization drops due to broader market volatility or investor reactions. However, claims like “NVIDIA lost $279 billion in one day” are often exaggerated or taken out of context. Stock market fluctuations do not directly impact the performance or demand for GPUs like the H200.

The answer depends on use case:

- For AI and data centers: NVIDIA H200 GPU
- For gaming: NVIDIA RTX 4090
- For professional visualization: NVIDIA RTX 6000 Ada

Each category has its own “No. 1” GPU.

The H200 GPU is more powerful than the H100, especially for AI workloads. It offers:

- Higher memory bandwidth
- Improved performance for LLMs
- Better efficiency for data-intensive tasks

This makes H200 the preferred choice for next-generation AI training and inference.

RTX (NVIDIA) and RX (AMD) serve different audiences:

- RTX GPUs excel in ray tracing, AI features, and professional workloads.
- RX GPUs often provide strong performance at competitive pricing for gaming.

For AI and enterprise use, NVIDIA RTX and H-series GPUs are generally preferred.

Yes, the NVIDIA RTX 6000 is a real professional-grade GPU designed for 3D rendering, AI development, and enterprise workloads. It is different from the H200 GPU, as RTX 6000 targets visualization and creative professionals rather than large-scale AI training.
