In 2025, the NVIDIA H200 GPU costs roughly $30,000 to $46,000 per unit, depending on the variant and purchase volume. On cloud platforms, H200 rental rates range from about $3.72 to $10.60 per GPU hour, with some providers offering single-GPU access and others selling only multi-GPU nodes. Demand is strong, with early adoption by large cloud providers and by emerging providers, including Cyfuture Cloud, which offers tailored H200 GPU services for AI and deep learning workloads.

The NVIDIA H200 GPU is the next-generation AI and high-performance computing GPU based on NVIDIA's Hopper architecture. It succeeds the H100, enhancing performance, memory, and power efficiency to meet the intensive demands of modern AI workloads like large language models (LLMs), scientific simulations, and transformer-based models such as GPT, LLaMA, and Gemini.

The H200 comes in two form factors: SXM modules for high-density multi-GPU boards and single-GPU H200 NVL PCIe cards. Since its launch in mid-2024, cloud providers and enterprises have adopted it quickly to take advantage of its larger memory and higher bandwidth.

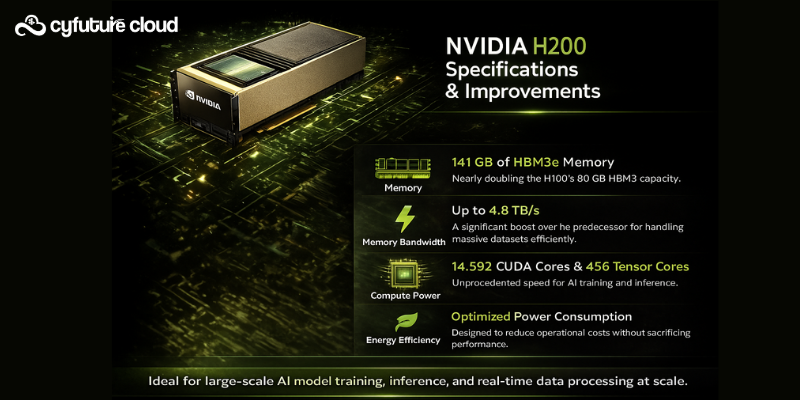

Memory: 141 GB of HBM3e memory, about 1.76 times the H100’s 80 GB of HBM3.

Memory Bandwidth: Up to 4.8 TB/s, roughly 1.4 times the 3.35 TB/s of the H100 SXM, which helps when streaming massive datasets and large model weights.

Compute Power: 16,896 CUDA cores and 528 Tensor Cores, the same counts as the H100 SXM, for fast AI training and inference.

Energy Efficiency: Optimized power consumption designed to reduce operational costs without sacrificing performance.

These specifications make the H200 ideal for large-scale AI model training, inference, and real-time data processing at scale.
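To make the memory figures concrete, the sketch below estimates whether a model's weights alone fit on a single GPU. It assumes 2 bytes per parameter (FP16/BF16) and 1 GB = 10^9 bytes, and it ignores KV cache, activations, and framework overhead, so real headroom is smaller. The function names and model sizes are illustrative assumptions, not NVIDIA figures.

```python
# Memory capacities quoted in the text above, in GB.
H200_MEMORY_GB = 141
H100_MEMORY_GB = 80


def weights_gb(params_billion: float, bytes_per_param: int = 2) -> float:
    """Approximate weight size in GB: billions of parameters times bytes each."""
    return params_billion * bytes_per_param


def fits(params_billion: float, memory_gb: float, bytes_per_param: int = 2) -> bool:
    """True if the weights alone fit in the given memory (no KV cache or activations)."""
    return weights_gb(params_billion, bytes_per_param) <= memory_gb


if __name__ == "__main__":
    # Hypothetical model sizes, in billions of parameters.
    for size in (13, 34, 70):
        print(
            f"{size}B params: {weights_gb(size)} GB of weights, "
            f"fits H100: {fits(size, H100_MEMORY_GB)}, "
            f"fits H200: {fits(size, H200_MEMORY_GB)}"
        )
```

Under these assumptions a 70-billion-parameter model needs 140 GB for weights, which exceeds the H100's 80 GB and only just fits in the H200's 141 GB, leaving almost nothing for the KV cache. In practice such a model would still be quantized or split across GPUs.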

A single NVIDIA H200 GPU typically costs around $30,000 to $46,000.

Multi-GPU boards vary significantly: 4-GPU boards cost around $175,000, while 8-GPU setups can exceed $300,000 depending on configuration.

The H200 is more expensive than the H100 due to increased memory size and bandwidth improvements.

Custom server boards with multiple H200 GPUs can cost between $100,000 and $350,000.
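As a quick sanity check on the purchase prices above, the following sketch converts the quoted board prices into an effective per-GPU cost. The board prices are the approximate figures from this article; the $300,000 entry is a lower bound, since 8-GPU setups "can exceed" it. The names used here are illustrative.

```python
# Approximate board prices quoted in the article, keyed by GPU count (USD).
BOARD_PRICES_USD = {4: 175_000, 8: 300_000}  # the 8-GPU figure is a lower bound

# Quoted price range for a single H200 (USD).
SINGLE_GPU_RANGE_USD = (30_000, 46_000)


def per_gpu_cost(board_price_usd: float, gpu_count: int) -> float:
    """Effective cost per GPU for a multi-GPU board."""
    return board_price_usd / gpu_count


if __name__ == "__main__":
    low, high = SINGLE_GPU_RANGE_USD
    for gpus, price in BOARD_PRICES_USD.items():
        cost = per_gpu_cost(price, gpus)
        in_range = low <= cost <= high
        print(f"{gpus}-GPU board: ${cost:,.0f} per GPU (within single-GPU range: {in_range})")
```

Both results ($43,750 and $37,500 per GPU) fall inside the $30,000 to $46,000 single-unit range, which suggests the board prices are broadly consistent with the per-unit figures.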

Cloud rental prices range from $3.72 to $10.60 per GPU hour, depending on the provider and region.

Some providers bundle GPUs in 8-GPU nodes, while certain platforms like Jarvislabs and Cyfuture Cloud offer single-H200 GPU rentals, enabling flexibility for developers and researchers.

Prices are influenced by demand, regional pricing differences, and whether the GPU is accessed on-demand or as spot instances.
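The hourly rates above translate into job costs with simple arithmetic. The sketch below estimates the cost of a rental at the low and high ends of the quoted range. The workload (8 GPUs for 72 hours) is a hypothetical example, and the calculation ignores storage, networking, and any spot or reserved discounts.

```python
# Quoted rental range in USD per GPU hour.
LOW_RATE_USD = 3.72
HIGH_RATE_USD = 10.60


def rental_cost(rate_per_gpu_hour: float, gpu_count: int, hours: float) -> float:
    """Total rental cost: rate x number of GPUs x hours."""
    return rate_per_gpu_hour * gpu_count * hours


if __name__ == "__main__":
    gpus, hours = 8, 72  # hypothetical training run
    low = rental_cost(LOW_RATE_USD, gpus, hours)
    high = rental_cost(HIGH_RATE_USD, gpus, hours)
    print(f"{gpus} GPUs for {hours} h: ${low:,.2f} to ${high:,.2f}")
```

For this example the same run costs between about $2,143 and $6,106, so the choice of provider and region can change the bill by nearly a factor of three.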

Major cloud providers like AWS, Microsoft Azure, Google Cloud, and Oracle Cloud have begun offering NVIDIA H200 GPUs in their data centers. However, most publicly available offerings come as multi-GPU nodes, which can rule out renting a single GPU.

Emerging cloud providers, including Cyfuture Cloud, offer competitive and flexible H200 GPU rental plans tailored to deep learning, AI, gaming simulations, and data analytics.

Supply chain constraints have caused some availability challenges, but the H200 is now widely accessible globally, with supply expected to stabilize by late 2025.

For enterprises or developers hesitant about capital expenditure, cloud rental options remain attractive, with Cyfuture Cloud providing hands-on access to cutting-edge GPU technology backed by robust infrastructure.

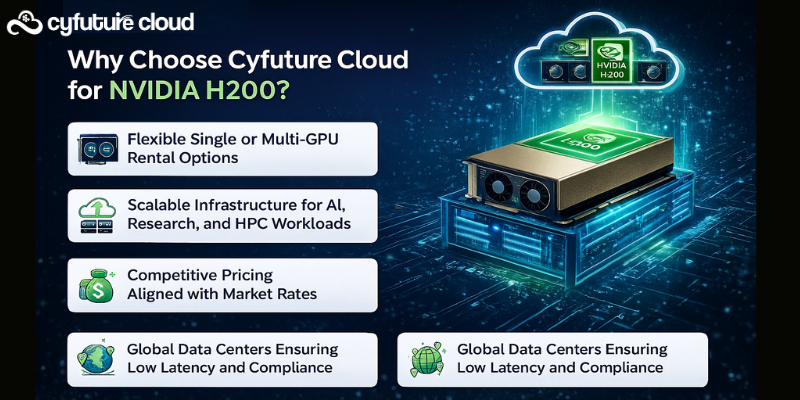

Cyfuture Cloud stands out as a strategic partner for businesses seeking access to NVIDIA H200 GPUs combined with responsive support and cost-efficient plans. With customized GPUaaS (GPU as a Service) offerings, Cyfuture Cloud enables enterprises to accelerate AI training and inference without heavy upfront investments.

Flexible single or multi-GPU rental options.

Scalable infrastructure for AI, research, and HPC workloads.

Competitive pricing aligned with market rates.

Global data centers ensuring low latency and compliance.

Compared with the H100, the H200 offers nearly double the memory (141 GB HBM3e vs. 80 GB HBM3), higher memory bandwidth (4.8 TB/s), and a similar number of compute cores, with improved energy efficiency and better performance on large AI models.

Buying H200 GPUs involves a high capital expense of $30,000 to $46,000 per unit, plus infrastructure costs. Renting from cloud providers like Cyfuture Cloud offers flexibility, no maintenance burden, and pay-as-you-go pricing, which suits variable workloads and prototyping.
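One rough way to compare the two options is a break-even estimate: the number of rented GPU hours whose cost equals the purchase price. The sketch below uses only the price ranges quoted in this article and deliberately ignores power, cooling, hosting, staffing, and resale value, all of which shift the real break-even point. The function name is illustrative.

```python
def break_even_hours(purchase_price_usd: float, rate_per_gpu_hour: float) -> float:
    """GPU hours of rental that cost as much as buying one GPU outright."""
    return purchase_price_usd / rate_per_gpu_hour


if __name__ == "__main__":
    # Best case for buying: cheapest unit against the most expensive rental rate.
    fastest = break_even_hours(30_000, 10.60)
    # Best case for renting: most expensive unit against the cheapest rental rate.
    slowest = break_even_hours(46_000, 3.72)
    print(f"Break-even: {fastest:,.0f} to {slowest:,.0f} GPU hours")
    print(f"Continuous use: {fastest / 24:,.0f} to {slowest / 24:,.0f} days")
```

With these inputs the break-even falls between roughly 2,830 and 12,366 GPU hours, or about 118 to 515 days of continuous use. Workloads that run well below that level of utilization tend to favor renting.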

Industries involved in AI research, machine learning, scientific computing, gaming simulations, and big data analytics gain major benefits from the H200's enhanced computing capabilities.

The NVIDIA H200 GPU is a leading AI accelerator in 2025, with substantial gains in memory capacity and bandwidth over the H100. Its pricing reflects these advances: purchase costs range from about $30,000 for a single GPU to upwards of $300,000 for multi-GPU servers, while rental rates vary by usage and provider. As availability expands, platforms like Cyfuture Cloud are making it simpler and more cost-effective for organizations of all sizes to access this technology on flexible terms, helping businesses remain competitive in an increasingly AI-driven world.
