Unleashing Intelligent Applications with AI Inference as a Service and Serverless Inferencing

Table of Contents

- What is AI Inference?

- The Traditional Inference Deployment Problem

- What is AI Inference as a Service?

- Key Features of AI Inference as a Service:

- Enter Serverless Inferencing: Inference on Demand

- Why AI Inference as a Service + Serverless Inferencing is a Perfect Match

- Use Cases Enabled by Serverless AI Inference

- Benefits of Choosing Cyfuture Cloud for AI Inference as a Service

- Best Practices for AI Inference in Production

- The Future of AI is Serverless

- Final Thoughts

Artificial Intelligence (AI) has transcended its buzzword status to become an integral part of modern business operations. From chatbots and fraud detection to real-time personalization and autonomous systems, AI is reshaping industries. But while developing AI models is one thing, efficiently deploying and scaling them is another challenge altogether.

That’s where AI Inference as a Service and Serverless Inferencing come into the picture.

These cloud-native innovations are helping businesses unlock the true potential of their AI investments—without worrying about infrastructure management, scalability, or cost overheads. At Cyfuture Cloud, we’re bringing these futuristic capabilities to the present, empowering organizations to run AI workloads faster, more affordably, and more flexibly than ever before.

In this blog, we’ll break down what AI inference is, why it matters, and how AI Inference as a Service combined with serverless inferencing is a game-changer for AI-powered applications.

What is AI Inference?

Before diving into the “as-a-service” model, let’s understand what AI inference actually is.

In simple terms, AI model development has two major phases:

- Training – where a model learns patterns from large datasets using powerful cloud computing resources (e.g., GPUs or TPUs).

- Inference – where the trained model makes predictions on new, unseen data.

While training happens infrequently and can be done offline, inference is what powers real-world applications—like recognizing faces in a photo, recommending products on an ecommerce website, or detecting spam emails.

Inference needs to be low-latency, cost-effective, and scalable, especially when serving thousands or millions of users in real time.
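To make the split between the two phases concrete, here is a minimal sketch of inference for a tiny logistic model. The weights and bias are hypothetical stand-ins for the output of an earlier training run; nothing here is specific to any platform.

```python
import math

# Hypothetical parameters, standing in for the result of a finished training run.
WEIGHTS = [0.8, -1.2, 0.5]
BIAS = -0.1

def predict(features):
    """Inference: one forward pass with fixed parameters; nothing is learned here."""
    score = sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS
    return 1.0 / (1.0 + math.exp(-score))  # logistic function, result lies in (0, 1)

# New, unseen input goes in; a probability comes out.
probability = predict([1.0, 0.0, 2.0])
```

Training would adjust `WEIGHTS` and `BIAS`; inference only reads them, which is why it can be served cheaply and repeatedly.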

The Traditional Inference Deployment Problem

Traditionally, inference workloads were deployed on dedicated servers or virtual machines (VMs). While this setup works, it introduces several challenges:

- Resource Wastage: Servers are often underutilized, leading to unnecessary costs.

- Complex Infrastructure Management: You need to provision, scale, and monitor infrastructure manually.

- Scalability Bottlenecks: Handling unpredictable workloads requires over-provisioning or complex auto-scaling mechanisms.

- Time-to-Market Delays: Engineering efforts are focused more on deployment logistics than model improvement.

To address these pain points, modern cloud platforms like Cyfuture Cloud are turning to AI Inference as a Service powered by Serverless Inferencing.

What is AI Inference as a Service?

AI Inference as a Service is a cloud-based offering that allows businesses to deploy, manage, and scale AI models for inference without having to worry about the underlying hardware or software infrastructure. (It should not be confused with Infrastructure as a Service, the usual meaning of the abbreviation IaaS.)

It abstracts away the complexity of serving AI models and offers simple APIs or endpoints to run predictions.
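As an illustration of what "simple APIs or endpoints" can look like from the client side, the sketch below builds a JSON prediction request with Python's standard library. The URL, the token, and the `{"instances": ...}` payload shape are all assumptions made for the example; the real values and schema depend on the provider.

```python
import json
import urllib.request

# Hypothetical endpoint and token; substitute whatever your provider issues.
ENDPOINT_URL = "https://inference.example.com/v1/models/demo:predict"
API_TOKEN = "YOUR_API_TOKEN"

def build_prediction_request(instances):
    """Build (but do not send) a JSON POST request carrying the model inputs."""
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        ENDPOINT_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_TOKEN}",
        },
        method="POST",
    )

request = build_prediction_request([[5.1, 3.5, 1.4, 0.2]])

# Sending it to a live endpoint would look like this:
# with urllib.request.urlopen(request, timeout=10) as response:
#     predictions = json.load(response)
```

The application only needs to know the endpoint and the payload format; where and how the model runs stays hidden behind the request.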

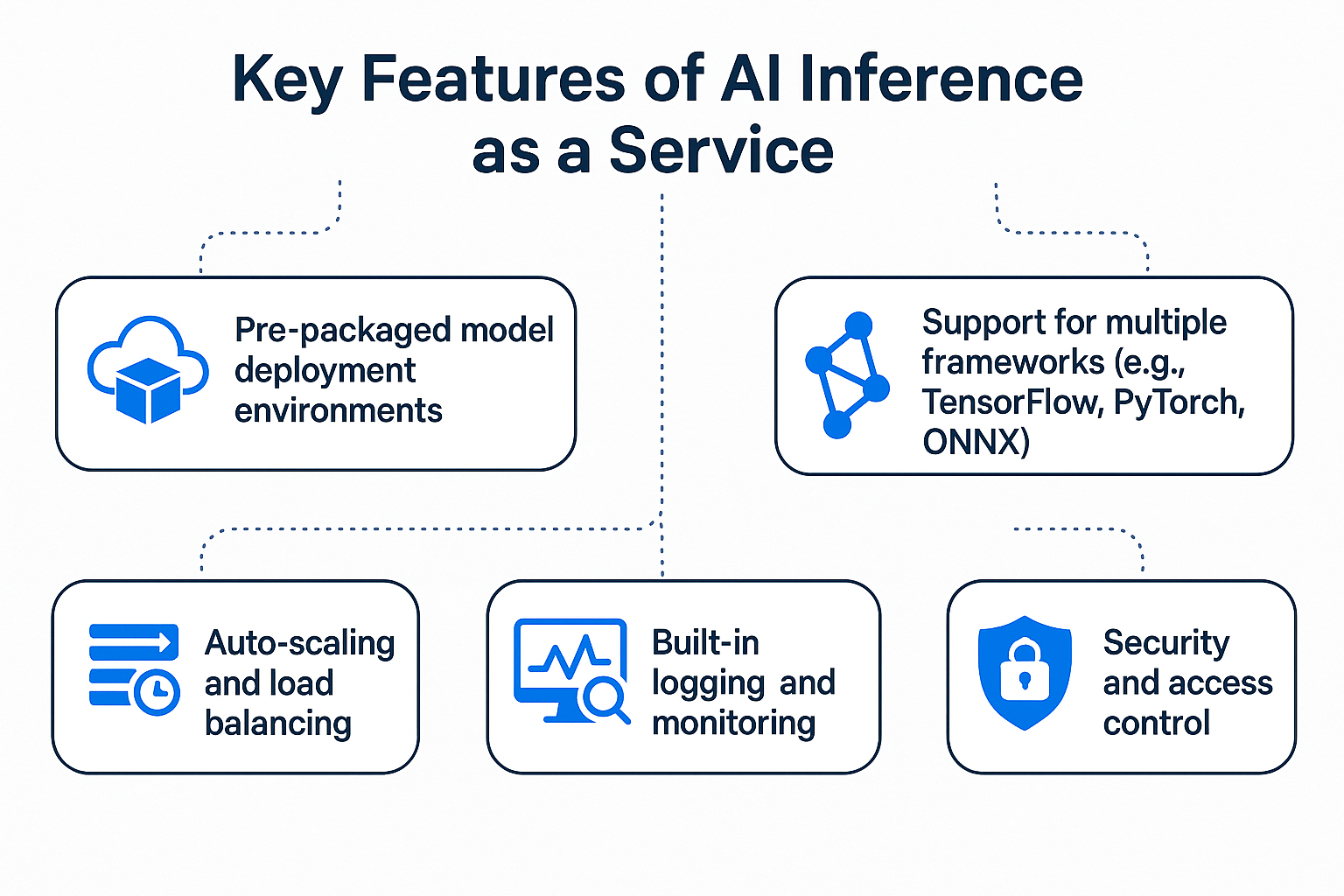

Key Features of AI Inference as a Service:

- Pre-packaged model deployment environments

- Support for multiple frameworks (e.g., TensorFlow, PyTorch, ONNX)

- Auto-scaling and load balancing

- Built-in logging and monitoring

- Security and access control

Cyfuture Cloud’s AI Inference as a Service allows enterprises to integrate machine learning models into applications—fast, securely, and at scale.

Enter Serverless Inferencing: Inference on Demand

Serverless inferencing is the next evolution in AI model deployment.

Serverless computing allows code or models to run without managing or provisioning servers. You only pay for the compute time you consume. No idle charges. No setup headaches.

In the context of AI, serverless inferencing enables you to:

- Automatically scale up during high demand

- Scale down to zero when idle

- Pay per inference or per request

This is especially useful for sporadic or unpredictable workloads—like an AI chatbot receiving queries during business hours or an anomaly detection model used during audits.
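The trade-off behind scale-to-zero pricing can be sketched with simple arithmetic. The prices below are made-up placeholders, not actual rates from any provider; the point is only the shape of the comparison.

```python
def always_on_cost(hourly_rate, hours):
    """Cost of a server that is billed whether or not it serves traffic."""
    return hourly_rate * hours

def pay_per_request_cost(price_per_request, requests):
    """Cost when billing follows usage and idle time is free."""
    return price_per_request * requests

def break_even_requests(hourly_rate, hours, price_per_request):
    """Request volume at which both billing models cost the same."""
    return always_on_cost(hourly_rate, hours) / price_per_request

# Hypothetical prices: 0.50 per server-hour, 0.0002 per request, 720 hours in a month.
server = always_on_cost(0.50, 720)                    # 360 per month, regardless of traffic
sporadic = pay_per_request_cost(0.0002, 200_000)      # about 40 for 200,000 requests
threshold = break_even_requests(0.50, 720, 0.0002)    # about 1.8 million requests
```

Under these assumed prices, a workload well below the break-even volume is cheaper on per-request billing, while a steadily busy endpoint may still favour an always-on server.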

Why AI Inference as a Service + Serverless Inferencing is a Perfect Match

When you combine the simplicity of AI Inference as a Service with the elasticity of Serverless Inferencing, you get a powerful solution that checks all the boxes:

| Feature | Traditional Inference | AI Inference as a Service + Serverless |
| --- | --- | --- |
| Deployment Time | Days to Weeks | Minutes |
| Infrastructure Management | Manual | Fully abstracted |
| Cost Model | Always-on servers | Pay-as-you-go |
| Scalability | Manual scaling required | Auto-scaling built-in |
| Integration | Complex APIs | REST/gRPC endpoints |
| Monitoring | Separate setup | Built-in dashboards |

With Cyfuture Cloud’s platform, deploying a model is as easy as uploading it to the console or via CLI, selecting compute preferences, and obtaining a secure endpoint.

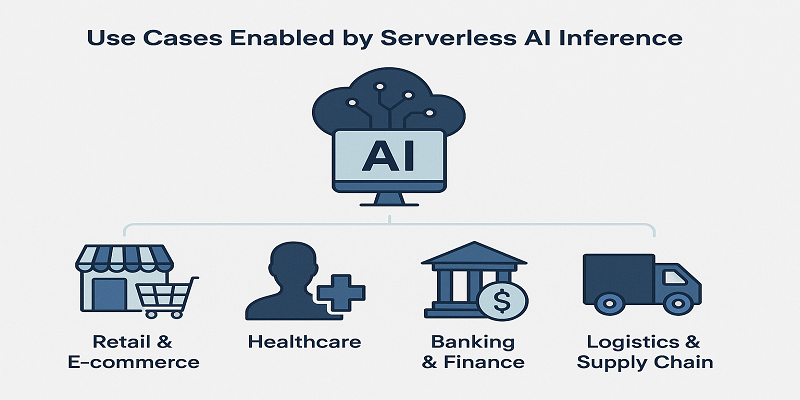

Use Cases Enabled by Serverless AI Inference

Here’s how industries are leveraging this new model:

Retail & E-commerce

- Personalized recommendations in real time

- Visual product search and tagging

- Customer sentiment analysis from reviews

Healthcare

- Image classification for radiology

- Real-time patient risk scoring

- Voice-to-text medical transcription

Banking & Finance

- Fraud detection at the point of transaction

- Credit scoring and risk prediction

- Automated document processing

Logistics & Supply Chain

- Route optimization using predictive models

- Demand forecasting

- Quality inspection using computer vision

Each of these workloads benefits from low-latency, highly available inferencing that automatically scales with demand—and that’s exactly what serverless AI inference delivers.

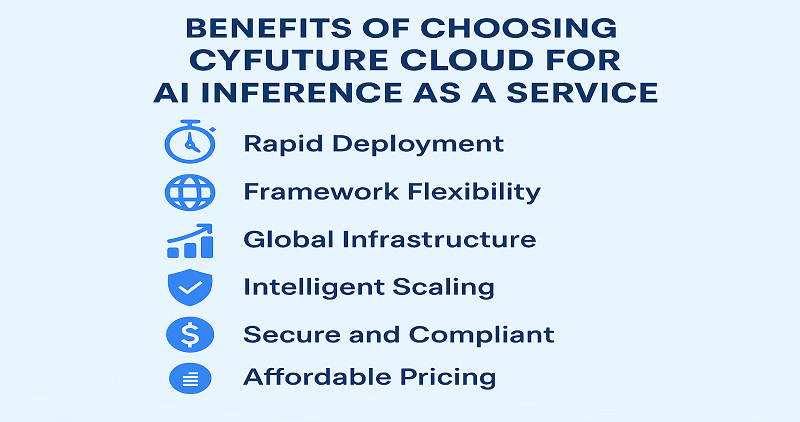

Benefits of Choosing Cyfuture Cloud for AI Inference as a Service

At Cyfuture Cloud, we’ve designed our AI cloud infrastructure to empower innovation while reducing friction. Here’s what sets us apart:

Rapid Deployment

Upload your model in any popular format and get a ready-to-use endpoint within minutes.

Framework Flexibility

Support for TensorFlow, PyTorch, scikit-learn, ONNX, Hugging Face Transformers, and more.

Global Infrastructure

Leverage our globally distributed cloud network for geo-optimized inference.

Intelligent Scaling

Automatically scale your inference workloads up or down based on usage patterns.

Secure and Compliant

We offer enterprise-grade security, role-based access, and compliance with GDPR, HIPAA, and other standards.

Affordable Pricing

Transparent, usage-based billing with no hidden fees—ideal for startups and enterprises alike.

Best Practices for AI Inference in Production

To maximize the efficiency and performance of your AI Inference as a Service deployment, follow these best practices:

Optimize Your Model

Use quantization, pruning, or distillation techniques to reduce model size and latency.
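As a rough illustration of quantization, the sketch below applies symmetric 8-bit quantization to a short list of weights in plain Python. It is a simplified teaching example under the assumption of one shared scale per tensor; production toolchains in the major frameworks use more elaborate schemes.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(v / scale) for v in values]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in quantized]

weights = [0.42, -1.27, 0.05, 0.90]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Each restored weight differs from the original by at most half a step (scale / 2).
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight now fits in one byte instead of four, at the price of a small, bounded rounding error.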

Batch Inference Where Possible

For high-throughput scenarios, batch multiple inputs to maximize GPU utilization.
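A minimal sketch of client-side batching follows. `run_model` is a hypothetical placeholder for a real batched model call; the helper just groups inputs so the model is invoked once per batch rather than once per item.

```python
def batched(items, batch_size):
    """Yield consecutive slices of at most batch_size items."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]

def run_model(batch):
    # Hypothetical stand-in for one batched forward pass on the accelerator.
    return [len(text) for text in batch]

def predict_all(inputs, batch_size=4):
    """Run the model once per batch and return outputs in input order."""
    outputs = []
    for batch in batched(inputs, batch_size):
        outputs.extend(run_model(batch))
    return outputs

results = predict_all(["a", "bb", "ccc", "dddd", "eeeee"], batch_size=2)
```

Larger batches usually improve throughput but add waiting time for the first item in each batch, so the batch size is a latency trade-off worth measuring.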

Use Caching for Repetitive Inputs

If certain queries repeat frequently, cache their outputs to reduce inference calls.
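One simple way to cache repeated queries inside a single process is Python's `functools.lru_cache`. In this sketch `run_model` is a hypothetical stand-in for the expensive inference call, and the counter exists only to show that the second identical query never reaches it.

```python
from functools import lru_cache

CALLS = {"model": 0}  # counts how often the expensive path actually runs

def run_model(text):
    # Hypothetical stand-in for a real (slow, billed) inference call.
    CALLS["model"] += 1
    return text.upper()

@lru_cache(maxsize=1024)
def cached_predict(text):
    """Return the cached result for inputs seen before; otherwise call the model."""
    return run_model(text)

first = cached_predict("hello")
second = cached_predict("hello")  # served from the cache, no model call
```

This only works for hashable inputs and deterministic models; a shared cache across several instances would need an external store instead.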

Monitor Latency and Throughput

Cyfuture Cloud’s built-in dashboards help you track performance in real time.
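When reading latency figures, percentiles are usually more informative than averages. The sketch below computes nearest-rank percentiles over a made-up sample of request latencies; the numbers are illustrative only.

```python
import math

def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of values at or below it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]

# Made-up latencies in milliseconds, with one slow outlier.
latencies_ms = [12, 15, 11, 14, 220, 13, 16, 12, 18, 14]

p50 = percentile(latencies_ms, 50)
p95 = percentile(latencies_ms, 95)
mean = sum(latencies_ms) / len(latencies_ms)
```

Here the median stays low while the 95th percentile exposes the slow request, which the mean alone would blur.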

Implement Rate Limiting and Access Control

Protect your inference endpoints from abuse and ensure only authorized services can access them.
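Rate limiting is often implemented with a token bucket. The following is a minimal single-process sketch, not a description of any platform's built-in limiter; the class name and parameters are chosen for the example.

```python
import time

class TokenBucket:
    """Allow bursts up to `capacity`, refilled at `rate_per_sec` tokens per second."""

    def __init__(self, rate_per_sec, capacity, clock=time.monotonic):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.tokens = float(capacity)  # start with a full bucket
        self.clock = clock             # injectable so tests can control time
        self.last = clock()

    def allow(self):
        """Return True and spend one token if the request may proceed."""
        now = self.clock()
        elapsed = now - self.last
        self.last = now
        # Refill for the time that has passed, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

# Usage: at most 5 requests per second per client, with bursts of up to 10.
limiter = TokenBucket(rate_per_sec=5, capacity=10)
accepted = limiter.allow()
```

A request that gets `False` would typically be answered with HTTP 429 so the caller knows to retry later.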

The Future of AI is Serverless

As AI continues to proliferate across industries, the need for efficient, cost-effective deployment becomes more critical. Serverless inferencing not only meets that need but future-proofs your AI strategy.

You don’t have to maintain idle infrastructure, wrestle with load balancers, or worry about latency spikes. You focus on building better models—we take care of the rest.

With Cyfuture Cloud’s AI Inference as a Service, you get the agility of serverless with the power of enterprise-grade AI infrastructure. Whether you’re deploying a chatbot, fraud detection system, or advanced image classifier, our platform helps you go from model to market in record time.

Final Thoughts

In today’s competitive digital landscape, the winners are those who can act on insights quickly and intelligently. With AI Inference as a Service and Serverless Inferencing, you’re not just running models—you’re delivering smart, real-time experiences to users across the globe.

At Cyfuture Cloud, we make this transformation seamless.


© Cyfuture, All rights reserved.
