Table of Contents

- Are You Ready for the Quantum Revolution in Data Center Infrastructure?

- What is Quantum Computing and Why Does It Matter for Data Centers?

- The Infrastructure Challenge: Why Traditional Data Centers Can’t Support Quantum Systems

- The Rise of Hybrid Quantum-Classical Architecture

- Data Center Design Transformations: The New Blueprint

- Energy Efficiency: A Double-Edged Sword

- Security Implications: The Post-Quantum Era

- Market Reality: Timelines and Adoption Rates

- The Quantum Talent Gap: A Critical Challenge

- Cyfuture Cloud’s Position in the Quantum Future

- Future Outlook: The Next Decade

- Overcoming Implementation Challenges

- Transform Your Infrastructure with Cyfuture Cloud’s Future-Ready Solutions

- Frequently Asked Questions (FAQs)

- 1. What is quantum computing and how does it differ from classical computing?

- 2. Do data centers need to completely rebuild to accommodate quantum computers?

- 3. How much does quantum computing infrastructure cost?

- 4. When will quantum computers become practical for business use?

- 5. What cooling systems do quantum computers require?

- 6. How does quantum computing impact data center security?

- 7. What industries will benefit most from quantum computing?

- 8. Can small and medium businesses access quantum computing?

- 9. What is the energy consumption comparison between quantum and classical data centers?

Are You Ready for the Quantum Revolution in Data Center Infrastructure?

Here’s the thing:

Data center architecture is undergoing its most significant transformation since the dawn of cloud computing. Quantum computing, once confined to theoretical physics labs, is now forcing a rethink of how we design, build, and operate data centers. This isn’t just an incremental upgrade; it’s a fundamental shift that will redefine computational infrastructure for the next generation.

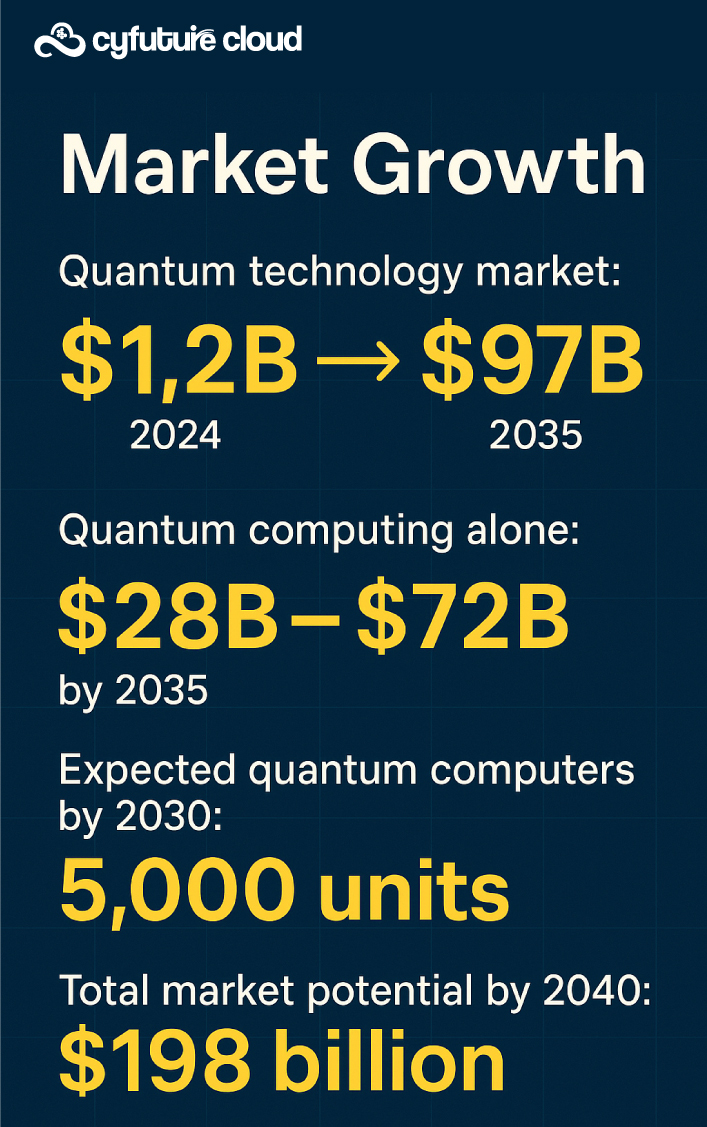

The stakes couldn’t be higher. By 2035, quantum computing could generate between $28 billion and $72 billion in market value, with estimates projecting up to 5,000 working quantum computers by 2030. For data center operators, enterprises, and cloud providers like Cyfuture Cloud, understanding this shift isn’t optional; it’s imperative.

What is Quantum Computing and Why Does It Matter for Data Centers?

Quantum computing harnesses the principles of quantum mechanics to perform calculations. Unlike classical computers, which use bits representing either a 0 or a 1, quantum computers employ qubits that can exist in a superposition: a weighted combination of 0 and 1 that resolves to a single value only when the qubit is measured.
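To make superposition a little more concrete, here is a minimal Python sketch that simulates a single qubit on a classical machine. It is an illustration only, not how a real quantum processor is programmed. The names `ZERO`, `hadamard`, and `measurement_probabilities` are hypothetical helpers introduced for this example.

```python
import math

# A single-qubit state is a pair of complex amplitudes (a0, a1)
# with |a0|^2 + |a1|^2 = 1. This is a classical simulation for illustration.
ZERO = (1 + 0j, 0 + 0j)  # the state that always measures as 0


def hadamard(state):
    """Apply the Hadamard gate, which turns the 0 state into an equal superposition."""
    a0, a1 = state
    s = 1 / math.sqrt(2)
    return (s * (a0 + a1), s * (a0 - a1))


def measurement_probabilities(state):
    """Return the probabilities of reading 0 and 1 when the qubit is measured."""
    a0, a1 = state
    return (abs(a0) ** 2, abs(a1) ** 2)


p0, p1 = measurement_probabilities(hadamard(ZERO))
print(f"P(0) = {p0:.2f}, P(1) = {p1:.2f}")  # prints "P(0) = 0.50, P(1) = 0.50"
```

Before measurement the qubit carries both amplitudes at once; the measurement itself returns a single 0 or 1 with the probabilities shown.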

Think of it this way:

Where traditional processors work sequentially through problems, quantum systems explore multiple solutions simultaneously. This isn’t just faster computing—it’s an entirely different computational model that excels at optimization, molecular modeling, cryptography, and machine learning tasks that would take classical supercomputers millennia to solve.

But here’s the catch:

These extraordinary capabilities come with extraordinary infrastructure demands.

The Infrastructure Challenge: Why Traditional Data Centers Can’t Support Quantum Systems

Cryogenic Cooling Requirements

Let’s be clear about something:

Most quantum computing systems must operate at extremely low temperatures, from several millikelvin to 10 kelvin, to maintain qubit states and prevent errors caused by thermal noise and vibrations. IBM’s dilution refrigerators operate at approximately 25 millikelvin, which is colder than outer space.

Consider these numbers:

Dilution refrigerator systems can bring qubits to about 50 millikelvin above absolute zero, requiring sophisticated multi-stage cooling processes. A typical lab-scale dilution refrigerator system requires 5–10 kW of electrical power, while large-scale systems used in quantum computing data centers consume significantly more.

The energy equation is sobering:

A quantum data center may complete a task twice as fast as a traditional one but consume ten times more energy due to its stringent cryogenic cooling requirements.
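A quick way to read that trade-off is through the relationship energy = power × time. The sketch below is a back-of-the-envelope calculation, not a measurement. The classical baseline of 100 kW for 2 hours is a hypothetical figure chosen for illustration, and "ten times more energy" is read as a 10x multiplier on energy per task.

```python
def energy_kwh(power_kw: float, hours: float) -> float:
    """Energy consumed by a task: average power draw multiplied by run time."""
    return power_kw * hours


# Hypothetical classical baseline (assumption, not from any vendor data).
classical_power_kw = 100.0
classical_hours = 2.0
classical_energy = energy_kwh(classical_power_kw, classical_hours)

# Ratios quoted in the article.
speedup = 2.0             # task finishes twice as fast
energy_multiplier = 10.0  # task consumes ten times the energy

quantum_hours = classical_hours / speedup
quantum_energy = classical_energy * energy_multiplier

# Ten times the energy in half the time implies twenty times the average power.
implied_quantum_power_kw = quantum_energy / quantum_hours
power_ratio = implied_quantum_power_kw / classical_power_kw

print(f"Classical: {classical_energy:.0f} kWh over {classical_hours:.1f} h")
print(f"Quantum:   {quantum_energy:.0f} kWh over {quantum_hours:.1f} h")
print(f"Implied average power ratio: {power_ratio:.0f}x")
```

Under these assumptions the facility has to deliver about 20 times the average power for the duration of the task, which is why power distribution and cooling dominate the design conversation.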

Electromagnetic Interference Protection

Quantum systems are extraordinarily sensitive.

Qubits are sensitive to electromagnetic interference (EMI), and even small disruptions can cause errors. Data center facilities must implement comprehensive EMI shielding that goes far beyond conventional practices. This means specialized room design, grounded cabling systems, and isolation from external radio frequencies.

Physical Space and Modular Design

Here’s where it gets interesting:

IBM plans to demonstrate a modular architecture by linking together at least three 462-qubit processors, each called Flamingo, into a 1,386-qubit system using long-range couplers through cryogenic cables around a meter long. IBM Quantum Starling, to be built in a new IBM Quantum Data Center in Poughkeepsie, New York by 2029, is expected to perform 20,000 times more operations than today’s quantum computers.

The physical footprint isn’t trivial either. Honeywell’s 32-qubit H2 system reportedly occupies around 200 square feet of space, and as systems scale, they’ll require even more room for interconnected quantum processors and supporting infrastructure.

The Rise of Hybrid Quantum-Classical Architecture

Now, here’s the game-changer:

Quantum computers won’t replace classical systems—they’ll augment them.

AWS notes quantum systems shouldn’t be looked at as replacement devices for traditional classical supercomputers, but rather as coprocessors or accelerators. Customers will run large HPC workloads where some portions run on CPUs, GPUs, and QPUs.

Real-World Hybrid Deployments

The hybrid model is already becoming reality:

IBM’s Quantum System One machine is colocated on the Rensselaer Polytechnic Institute campus with the university’s Artificial Intelligence Multiprocessing Optimized System, a classical supercomputer. Similarly, Riken, a national scientific research institute in Japan, has connected an IBM Quantum System Two with its Fugaku supercomputer.

“We see quantum computing as a hybrid cloud technology that works hand in hand with currently existing compute technologies, such as CPUs and GPUs,” says Jan Goetz, CEO of IQM Quantum Computers, in a discussion about quantum data center integration.

The Network-Aware Orchestrator

To enable efficient execution of distributed quantum computing jobs, researchers have introduced the concept of a network-aware quantum orchestrator, a framework designed to bridge physical-layer architectures with higher-level quantum applications in quantum data center networks.

This orchestration layer becomes critical for:

- Dynamic resource allocation between quantum and classical systems

- Workload routing based on computational requirements

- Real-time error correction coordination

- Optimal utilization of limited quantum resources
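The routing and allocation ideas in the list above can be sketched as a toy scheduler. This is a simplified illustration under stated assumptions, not the orchestrator described by the researchers. The `Job` and `Orchestrator` classes, the job kinds, and the qubit counts are all hypothetical, and error correction coordination is left out entirely.

```python
from dataclasses import dataclass, field


@dataclass
class Job:
    name: str
    kind: str               # e.g. "optimization" or "batch-analytics" (hypothetical labels)
    qubits_needed: int = 0  # 0 means the job has no quantum portion


@dataclass
class Orchestrator:
    qpu_qubits_free: int  # scarce quantum capacity shared by all jobs
    quantum_kinds: frozenset = frozenset({"optimization", "molecular-modeling"})
    placements: dict = field(default_factory=dict)

    def route(self, job: Job) -> str:
        """Send a job to the QPU only if it suits quantum hardware and capacity remains."""
        wants_qpu = job.kind in self.quantum_kinds and job.qubits_needed > 0
        if wants_qpu and job.qubits_needed <= self.qpu_qubits_free:
            self.qpu_qubits_free -= job.qubits_needed  # reserve the limited resource
            target = "qpu"
        else:
            target = "classical"  # fall back to CPUs/GPUs
        self.placements[job.name] = target
        return target


orch = Orchestrator(qpu_qubits_free=100)
jobs = [
    Job("route-plan", "optimization", qubits_needed=60),
    Job("nightly-etl", "batch-analytics"),
    Job("portfolio", "optimization", qubits_needed=60),
]
for job in jobs:
    print(job.name, "->", orch.route(job))
```

In this run the first optimization job takes 60 of the 100 free qubits, so the second one falls back to classical hardware. A production orchestrator would also queue, preempt, and account for network topology, which this sketch does not attempt.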

Data Center Design Transformations: The New Blueprint

Modular and Scalable Systems

IBM’s Quantum System Two, unveiled in November 2021, represents the first example of how a system could be built to scale up using a modular design. Given the one-meter constraint on connectivity between qubit systems, clusters of systems could be arranged in a cylindrical fashion, where each cylinder comprises a fridge with a number of interconnected 300-400-qubit systems.

Think of it as LEGO blocks for quantum computing:

Each module contains 300-400 qubits, and these modules can be linked together to create increasingly powerful systems. IBM’s new architecture uses qLDPC error-correcting codes to reduce physical qubit overhead by up to 90 percent, making scalability more practical.
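To see what a 90 percent overhead reduction means in practice, here is a small arithmetic sketch. The baseline of 1,000 physical qubits per logical qubit is a hypothetical round number chosen for illustration, not a figure from IBM, and the 200 logical qubits is simply an example target.

```python
def physical_qubits(logical_qubits: int, physical_per_logical: int) -> int:
    """Total physical qubits needed to encode a given number of logical qubits."""
    return logical_qubits * physical_per_logical


BASELINE_PER_LOGICAL = 1000  # hypothetical baseline overhead (assumption)
REDUCTION_PERCENT = 90       # "up to 90 percent" reduction quoted in the article

# Integer arithmetic avoids floating-point rounding in the percentage step.
reduced_per_logical = BASELINE_PER_LOGICAL * (100 - REDUCTION_PERCENT) // 100

logical = 200  # example target size
baseline_total = physical_qubits(logical, BASELINE_PER_LOGICAL)
reduced_total = physical_qubits(logical, reduced_per_logical)

print(f"Baseline: {baseline_total:,} physical qubits")  # prints "Baseline: 200,000 physical qubits"
print(f"Reduced:  {reduced_total:,} physical qubits")   # prints "Reduced:  20,000 physical qubits"
```

Fewer physical qubits per logical qubit translates directly into fewer modules, fewer cryostats, and less floor space for the same logical capacity.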

Specialized Infrastructure Requirements

Data centers integrating quantum systems need:

- Advanced Power Distribution: Equal1 launched Bell-1 in March 2025, the first quantum system designed for HPC environments, featuring a compact, rack-mountable design with 1,600 W power consumption

- Cryogenic Infrastructure: Bluefors delivered and installed 18 advanced KIDE cryogenic systems at Japan’s G-QuAT center in May 2025, providing millikelvin cooling to support >1,000-qubit quantum computing

- Low-Vibration Environments: Quantum processors are incredibly sensitive to mechanical vibrations that can cause computational errors

- Quantum Networking Capabilities: Scalable quantum data center networks leverage dynamic, circuit-switched quantum networks to facilitate efficient entanglement distribution between quantum processing units using shared resources

Energy Efficiency: A Double-Edged Sword

Let’s address the elephant in the room:

Quantum computing promises dramatic computational efficiency while simultaneously demanding massive cooling energy. Data centers are on track to require an estimated 848 terawatt-hours by 2030, and up to 40 percent of that total will go toward cooling alone.
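For a sense of scale, the cooling share works out as follows. This is plain arithmetic on the two figures quoted above; the function name `cooling_twh` is a hypothetical helper for this example.

```python
def cooling_twh(total_twh: float, cooling_share_percent: float) -> float:
    """Energy spent on cooling, given total demand and the cooling share in percent."""
    return total_twh * cooling_share_percent / 100


TOTAL_TWH_2030 = 848     # estimated data center demand by 2030 (from the article)
MAX_COOLING_PERCENT = 40  # "up to 40 percent" upper bound (from the article)

upper_bound = cooling_twh(TOTAL_TWH_2030, MAX_COOLING_PERCENT)
print(f"Cooling could account for up to {upper_bound:.1f} TWh")  # prints "... up to 339.2 TWh"
```

Because 40 percent is an upper bound, the same helper can be called with lower shares to explore more efficient cooling scenarios.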

But there’s a silver lining:

Quantum computing has the potential to significantly reduce energy consumption in data centers because quantum systems can process complex calculations faster while using less energy for actual computation, even though the cooling requirements offset some of these gains.

Innovative Cooling Solutions

Research continues to push boundaries:

Researchers propose deploying quantum processors on stratospheric High Altitude Platforms, leveraging −50 °C ambient temperatures to reduce cooling demands by 21 percent and support 30 percent more qubits than terrestrial quantum data centers.

While this specific approach remains experimental, it demonstrates the industry’s commitment to solving the energy equation.

Security Implications: The Post-Quantum Era

Here’s something that keeps CISOs awake at night:

The potential arrival of Q-Day, when quantum computers become powerful enough to break current encryption standards and critical digital infrastructure worldwide, represents a major shift in security.

Quantum-Safe Infrastructure

Forward-thinking organizations are preparing now:

IBM’s quantum-safe cryptographic algorithms were officially published as part of the first post-quantum cryptography standards by NIST in August 2024. Data centers must implement post-quantum cryptographic protocols before large-scale quantum computers become available.
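A practical first step toward that migration is a cryptographic inventory. The sketch below flags systems that still rely on public-key algorithms a large-scale quantum computer is expected to break. The inventory entries and the `classify` helper are hypothetical, and the three post-quantum names are the algorithms in NIST's August 2024 standards (FIPS 203, 204, and 205). Treat it as a starting checklist, not a compliance tool.

```python
# Public-key families based on factoring or discrete logarithms,
# which Shor's algorithm would break on a large enough quantum computer.
QUANTUM_VULNERABLE = {"RSA", "ECC", "ECDSA", "ECDH", "DH"}

# Algorithms standardized by NIST in August 2024 (FIPS 203, 204, 205).
NIST_PQC_2024 = {"ML-KEM", "ML-DSA", "SLH-DSA"}


def classify(algorithm: str) -> str:
    """Label an algorithm as needing migration, already post-quantum, or needing manual review."""
    name = algorithm.upper()
    if name in QUANTUM_VULNERABLE:
        return "migrate"
    if name in NIST_PQC_2024:
        return "post-quantum"
    return "review"  # anything unrecognized (including symmetric ciphers) gets a human look


# Hypothetical inventory of systems and the algorithm each one depends on.
inventory = {
    "vpn-gateway": "RSA",
    "api-tls": "ECDSA",
    "backup-signing": "ML-DSA",
    "legacy-app": "AES-256",
}

report = {system: classify(alg) for system, alg in inventory.items()}
for system, status in report.items():
    print(f"{system:<15} {inventory[system]:<8} {status}")
```

Anything labeled "migrate" is a candidate for replacement before large-scale quantum computers arrive; "review" entries need a person to decide, since this sketch only recognizes a handful of names.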

The quantum communication market is expected to reach $10.5 billion to $14.9 billion by 2035, representing a CAGR of 22 to 25 percent, driven largely by security concerns.

Market Reality: Timelines and Adoption Rates

Let’s cut through the hype:

There’s considerable debate about quantum computing timelines. NVIDIA CEO Jensen Huang stated in January 2025 that useful quantum computers are 15 to 30 years away, arguing that classical computing, particularly with advancements in AI and GPU systems, would remain dominant for the foreseeable future.

However:

Microsoft, backed by DARPA in its US2QC program, has demonstrated the first topological qubit, with a system already containing eight qubits on a chip designed to scale to one million.

Investment Trends

The numbers tell a compelling story:

In 2024, private and public investors poured nearly $2.0 billion into quantum technology start-ups worldwide, a roughly 50 percent increase compared to $1.3 billion in 2023. The quantum computing market is projected to reach between $90 billion and $170 billion by 2040, representing a 36 to 48 percent CAGR.
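For readers who want to check growth figures like these, the sketch below shows the standard formulas for year-over-year growth and compound annual growth rate (CAGR). The helper names are hypothetical. Since the article does not state the base-year market size behind the 2040 projection, the projection example uses a normalized base of 1.0 and a 15-year horizon purely as assumptions.

```python
def growth_rate(start: float, end: float) -> float:
    """Simple growth between two values, as a fraction (0.5 means 50 percent)."""
    return (end - start) / start


def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate that takes `start` to `end` over `years`."""
    return (end / start) ** (1 / years) - 1


def project(start: float, rate: float, years: float) -> float:
    """Value after compounding `start` at `rate` per year for `years`."""
    return start * (1 + rate) ** years


# Investment figures from the article, in billions of dollars (rounded).
yoy = growth_rate(1.3, 2.0)
print(f"2023 to 2024 growth from rounded figures: {yoy:.1%}")

# Hypothetical: how much a normalized base of 1.0 grows over 15 years
# at the low and high ends of the quoted CAGR range.
for rate in (0.36, 0.48):
    print(f"CAGR {rate:.0%}: multiplies the base by {project(1.0, rate, 15):.0f}x")
```

With the rounded inputs the year-over-year growth comes out at about 54 percent, in line with the article's 50 percent figure given that the 2024 total is "nearly" $2.0 billion. The projection loop also shows how sensitive long-range forecasts are to the assumed rate.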

The Quantum Talent Gap: A Critical Challenge

Here’s a sobering reality:

Projections suggest the industry may face a significant shortfall, with demand potentially reaching 10,000 qualified workers by 2025, while the available workforce is expected to be less than half that number at under 5,000 people.

By 2030, the quantum computing industry is expected to create 250,000 new jobs, and by 2035, projections show up to 840,000 new jobs created.

This talent shortage affects:

- Quantum algorithm developers

- Quantum systems engineers

- Cryogenic specialists

- Quantum-classical integration architects

Cyfuture Cloud’s Position in the Quantum Future

As India’s fastest-growing cloud infrastructure provider, Cyfuture Cloud recognizes that quantum readiness isn’t about immediate deployment—it’s about strategic preparation.

Our approach involves:

- Infrastructure Flexibility: Building modular data center designs that can accommodate future quantum systems without complete overhauls

- Hybrid Architecture Expertise: Developing orchestration capabilities that will seamlessly integrate quantum coprocessors when they become commercially viable

- Security Posture: Implementing post-quantum cryptographic standards now to protect customer data against future quantum threats

- Energy Optimization: Investing in advanced cooling technologies and renewable energy sources to support the power-intensive future of quantum-classical hybrid computing

Cyfuture Cloud’s commitment to staying at the forefront of infrastructure innovation positions our clients to leverage quantum capabilities as they mature, without the risk of premature investment or technological obsolescence.

Future Outlook: The Next Decade

Let’s look at what’s coming:

IBM Quantum Starling will use 200 logical qubits to run 100 million quantum operations and serve as the foundation for IBM Quantum Blue Jay, designed to scale to 2,000 logical qubits and 1 billion operations.

To represent the computational state of an IBM Starling would require the memory of more than a quindecillion (10^48) of the world’s most powerful supercomputers—that’s computational power beyond human comprehension.
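The reason classical memory runs out so quickly is that an n-qubit state needs 2^n complex amplitudes. The sketch below computes the raw storage for a full state vector, assuming 16 bytes per amplitude (two 64-bit floats). Treating Starling's 200 logical qubits as an ideal 200-qubit register is a simplifying assumption, and the sketch does not attempt to reproduce IBM's quindecillion estimate.

```python
BYTES_PER_AMPLITUDE = 16  # one complex number stored as two 64-bit floats (assumption)


def state_vector_bytes(num_qubits: int) -> int:
    """Bytes needed to store every amplitude of an n-qubit state on a classical machine."""
    return (2 ** num_qubits) * BYTES_PER_AMPLITUDE


for n in (10, 30, 50, 200):
    print(f"{n:>3} qubits: {state_vector_bytes(n):.3e} bytes")
```

Every additional qubit doubles the requirement: 30 qubits already need 16 GiB, and by 200 qubits the figure is far beyond any classical storage that could be built.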

The Road to Quantum Advantage

“In 2024, the quantum technology industry saw a shift from growing quantum bits to stabilizing qubits—and that marks a turning point. It signals to mission-critical industries that quantum technology could soon become a safe and reliable component of their technology infrastructure,” according to McKinsey’s latest Quantum Technology Monitor.

From Google’s achievements in quantum error correction to Oxford Ionics’ world records in qubit performance, 2024 was a year marked by incredible milestones for the quantum computing industry, with companies now able to answer fundamental questions about scalability and error correction with a resounding yes.

Overcoming Implementation Challenges

Cost Considerations

A quantum computer, including the refrigeration unit, can cost up to $100 million at scale. For most organizations, cloud-based quantum computing access represents the most practical entry point.

Integration Complexity

Quantum hardware struggles in a noisy data center, doesn’t naturally fit in with software architecture, and there is a technical language barrier between quantum and classical computing engineers.

Successful integration requires:

- Cross-functional teams bridging quantum and classical expertise

- Robust testing environments

- Iterative deployment strategies

- Strong vendor partnerships

Transform Your Infrastructure with Cyfuture Cloud’s Future-Ready Solutions

The quantum revolution isn’t coming—it’s already here.

Data center architecture is undergoing its most profound transformation in decades. The United Nations has designated 2025 the International Year of Quantum Science and Technology, celebrating 100 years since the initial development of quantum mechanics, and the industry momentum is undeniable.

Organizations that prepare now will lead their industries tomorrow.

At Cyfuture Cloud, we’re building the bridge between today’s classical infrastructure and tomorrow’s quantum-enhanced computing landscape. Our state-of-the-art data centers, combined with our commitment to innovation, position you for success regardless of how quickly quantum technologies mature.

Don’t wait for the future to arrive unprepared.

Partner with Cyfuture Cloud today to develop your quantum readiness strategy, implement post-quantum security measures, and build the flexible, scalable infrastructure that will power your organization through the next computational revolution.

The question isn’t whether quantum computing will transform data centers—it’s whether your infrastructure will be ready when it does.

Frequently Asked Questions (FAQs)

1. What is quantum computing and how does it differ from classical computing?

Quantum computing uses quantum bits (qubits) that can exist in multiple states simultaneously (superposition) and be interconnected (entanglement), allowing quantum computers to process multiple calculations in parallel. Classical computers use binary bits (0 or 1) and process information sequentially. This fundamental difference enables quantum computers to solve certain complex problems exponentially faster than classical systems.

2. Do data centers need to completely rebuild to accommodate quantum computers?

No, complete rebuilds aren’t necessary. Most quantum integration will happen through hybrid architectures where quantum processing units serve as specialized coprocessors alongside classical systems. However, dedicated areas will need upgrades for cryogenic cooling, EMI shielding, vibration dampening, and specialized power delivery. The extent of modifications depends on whether you’re hosting quantum systems on-premise or accessing them via cloud.

3. How much does quantum computing infrastructure cost?

Costs vary dramatically based on scale. Individual quantum computers with refrigeration units can cost up to $100 million at enterprise scale. However, most organizations will initially access quantum computing through cloud services (Quantum-as-a-Service), which requires minimal infrastructure investment. As the technology matures and economies of scale develop, costs are expected to decrease significantly.

4. When will quantum computers become practical for business use?

Timeline estimates vary considerably. Conservative estimates suggest 15-30 years for widespread practical applications, while optimistic projections indicate significant business value within 5-10 years for specific use cases. Current consensus suggests we’ll see early commercial applications in optimization, drug discovery, and financial modeling by 2030, with broader adoption following throughout the 2030s.

5. What cooling systems do quantum computers require?

Quantum computers need cryogenic cooling systems, typically dilution refrigerators, that maintain temperatures between 10 and 50 millikelvin (near absolute zero). These multi-stage systems use helium-3 and helium-4 isotopes to achieve ultra-low temperatures. A typical lab-scale system requires 5-10 kW of electrical power, while large-scale data center installations consume significantly more. The cooling energy often exceeds the computational energy by orders of magnitude.

6. How does quantum computing impact data center security?

Quantum computing presents both threats and solutions. Large-scale quantum computers could break current RSA and ECC encryption (the “Q-Day” scenario). However, quantum technology also enables quantum key distribution (QKD) and quantum-safe cryptography. Organizations should implement post-quantum cryptographic standards now, as defined by NIST in 2024, to protect data against future quantum attacks.

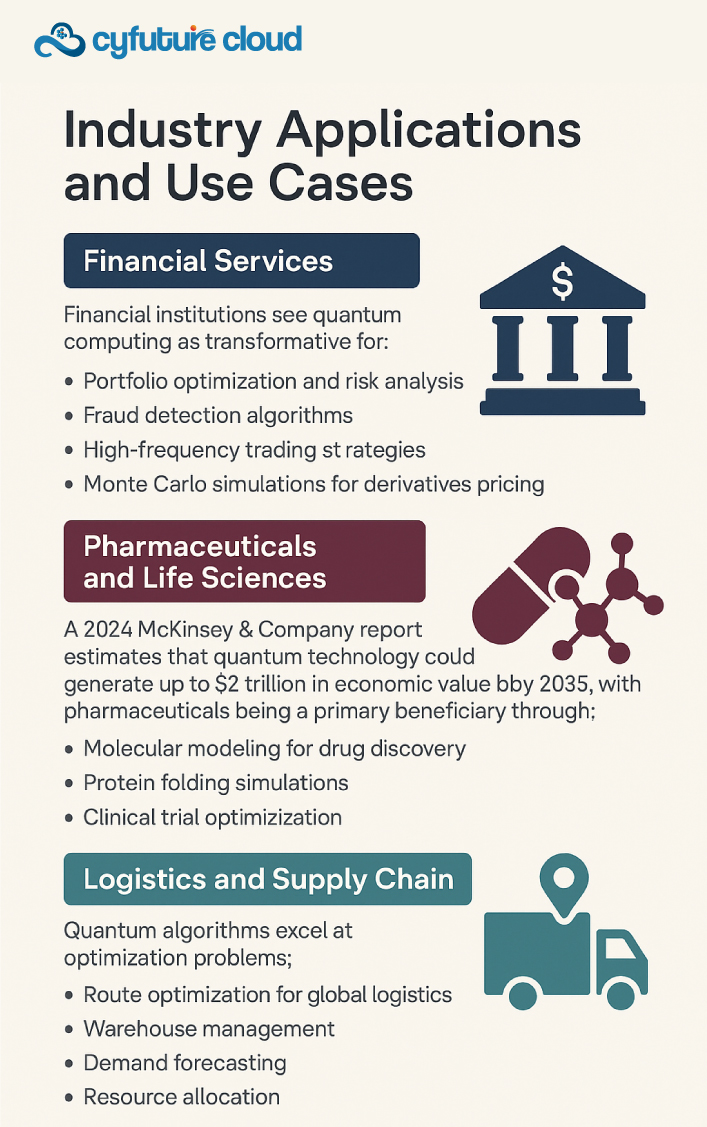

7. What industries will benefit most from quantum computing?

Four industries show the highest potential for quantum advantage: pharmaceuticals/life sciences (molecular modeling and drug discovery), financial services (portfolio optimization and risk analysis), logistics/transportation (route optimization and supply chain management), and chemicals/materials science (molecular simulation). These industries involve complex optimization problems and molecular-level simulations where quantum computing excels.

8. Can small and medium businesses access quantum computing?

Yes, through Quantum Computing as a Service (QCaaS) cloud platforms offered by IBM, Google, Amazon (Amazon Braket), Microsoft (Azure Quantum), and other providers. These services allow businesses to access quantum processors remotely without investing in expensive infrastructure. This democratization of access enables SMBs to experiment with quantum algorithms and develop quantum-ready applications at minimal cost.

9. What is the energy consumption comparison between quantum and classical data centers?

The comparison is complex. While quantum computers can complete certain calculations using less computational energy, the cryogenic cooling requirements often result in 10x higher total energy consumption for equivalent tasks. However, for problems where quantum computers offer exponential speedup, the overall energy efficiency (energy per solution) may actually be superior. As cooling technologies improve, this equation will continue to evolve.
