What is the NVIDIA H100 GPU?

The NVIDIA H100 GPU represents a monumental leap in artificial intelligence (AI) and high-performance computing (HPC). Designed with the revolutionary Hopper architecture, the H100 is built to handle the most complex computational tasks, including AI training, deep learning, data analytics, and large-scale simulations.

With an emphasis on performance, efficiency, and scalability, it offers groundbreaking advancements over its predecessor, the A100, making it the preferred choice for AI researchers, cloud providers, and enterprises looking to push the limits of machine intelligence.

As AI models become increasingly complex, the demand for powerful GPUs grows. The H100 meets this need with higher compute throughput, more memory bandwidth, and greater parallelism than the previous generation.

Whether used for cloud computing, AI inference, or scientific research, the H100 enables faster training times, lower latency, and greater efficiency. This post explores its architecture, key features, and real-world applications.

The NVIDIA H100: A New Era of Computing

Hopper Architecture: The Foundation of H100

The NVIDIA H100 GPU is powered by the Hopper architecture, the successor to the Ampere architecture found in the A100. Named after computing pioneer Grace Hopper, this architecture introduces several enhancements designed to maximize throughput for AI and HPC workloads.

Key architectural advancements include:

- Transformer Engine: Designed to accelerate deep learning models, particularly large-scale Transformer-based architectures used in NLP (natural language processing) and generative AI.

- Fourth-generation Tensor Cores: Delivering up to 6x higher AI throughput than the A100 according to NVIDIA (FP8 on the H100 compared with FP16 on the A100), with mixed-precision support for FP8, FP16, and TF32.

- Second-generation Multi-Instance GPU (MIG): Allows partitioning the GPU into multiple instances, enabling optimized resource utilization for cloud hosting providers and enterprise users.

- Confidential Computing: Hardware-based protections that keep AI models and sensitive data isolated in multi-tenant cloud environments.

- High-Bandwidth Memory (HBM3): The H100 SXM uses HBM3 memory (the PCIe variant uses HBM2e) for higher bandwidth and faster data transfer under memory-intensive workloads.

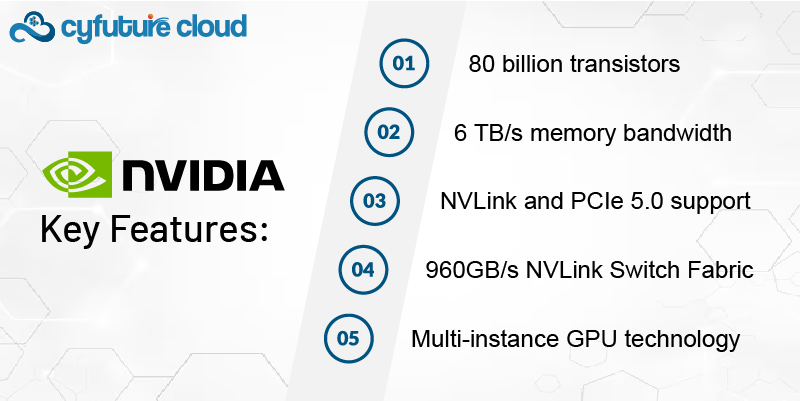

Key Features and Specifications

The NVIDIA H100 GPU comes packed with features that make it an industry leader in AI and HPC acceleration. Some of the standout specifications include:

- 80 billion transistors, manufactured using TSMC's 4N process (a custom 4 nm-class node).

- 34 teraflops of FP64 performance on the SXM variant (67 teraflops with FP64 Tensor Cores), making it well suited to scientific and engineering workloads.

- Up to 3.35 TB/s memory bandwidth on the SXM variant, powered by HBM3 memory.

- NVLink and PCIe 5.0 support, enhancing interconnect speeds for multi-GPU setups.

- 900 GB/s of fourth-generation NVLink bandwidth per GPU, with NVLink Switch support for direct communication between multiple GPUs.

- Multi-instance GPU (MIG) technology, ensuring optimal resource allocation for AI training and cloud-based services.

- FP8 and FP16 precision support, reducing memory requirements while maintaining accuracy in deep learning models.
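To make the memory savings from lower precision concrete, here is a minimal sketch that estimates how much memory a model's weights need at each precision. The 7-billion-parameter model is a hypothetical example, and the estimate covers weights only (activations, gradients, and optimizer state are ignored).

```python
# Bytes needed to store one value in each numeric format.
BYTES_PER_VALUE = {"FP32": 4, "FP16": 2, "FP8": 1}


def weight_memory_gb(num_params: int, precision: str) -> float:
    """Return the memory needed for model weights alone, in GB (10^9 bytes)."""
    return num_params * BYTES_PER_VALUE[precision] / 1e9


if __name__ == "__main__":
    params = 7_000_000_000  # hypothetical 7-billion-parameter model
    for precision in BYTES_PER_VALUE:
        print(f"{precision}: {weight_memory_gb(params, precision):.0f} GB")
```

Halving the bytes per value halves the weight footprint, which is why FP8 lets a larger model, or a larger batch, fit in the same 80 GB of GPU memory.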

Performance Comparison: H100 vs A100

The H100 outperforms its predecessor, the A100, in nearly every category. Here’s a quick comparison of their key performance metrics:

| Feature | NVIDIA H100 (SXM) | NVIDIA A100 (SXM, 80 GB) |
| --- | --- | --- |
| Architecture | Hopper | Ampere |
| Transistors | 80 billion | 54 billion |
| Process Technology | TSMC 4N (4 nm class) | TSMC 7 nm |
| FP64 Performance | 34 teraflops (67 with Tensor Cores) | 9.7 teraflops (19.5 with Tensor Cores) |
| Memory Bandwidth | 3.35 TB/s | 2 TB/s |
| Tensor Core Performance | Up to 6x faster (FP8 vs. A100 FP16) | Baseline |
| NVLink Speed | 900 GB/s | 600 GB/s |
| MIG Support | 7 instances | 7 instances |

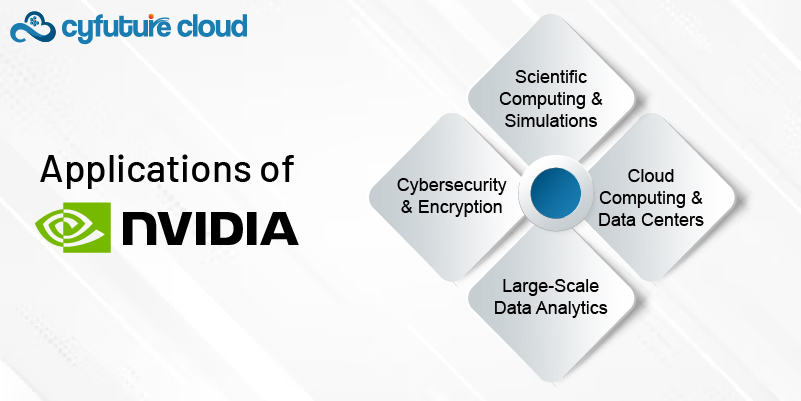
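For a quick sense of the generational gap, the sketch below computes H100-to-A100 ratios for a few headline metrics. The numbers are taken from NVIDIA's published figures for the SXM variants and should be treated as assumptions; check the current datasheets before relying on them.

```python
# Assumed datasheet figures for the SXM variants (verify against NVIDIA's datasheets).
H100 = {"fp64_tflops": 34.0, "mem_bw_tb_s": 3.35, "nvlink_gb_s": 900.0, "transistors_bn": 80.0}
A100 = {"fp64_tflops": 9.7, "mem_bw_tb_s": 2.0, "nvlink_gb_s": 600.0, "transistors_bn": 54.0}


def generation_ratios(new: dict, old: dict) -> dict:
    """Return new/old for every metric present in both dictionaries."""
    return {key: new[key] / old[key] for key in new if key in old}


if __name__ == "__main__":
    for metric, ratio in generation_ratios(H100, A100).items():
        print(f"{metric}: {ratio:.2f}x")
```

The ratios differ widely by metric: raw FP64 throughput grows far more than memory bandwidth or NVLink speed, so the speedup a given workload sees depends on which resource limits it.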

Real-World Applications of NVIDIA H100

The NVIDIA H100 GPU is designed for a wide range of high-performance computing applications. Some of the most impactful use cases include:

AI and Machine Learning

The H100 accelerates deep learning workloads, making it ideal for training massive AI models such as GPT, BERT, and DALL·E. Its FP8 support and Transformer Engine dramatically reduce training time and energy consumption, allowing researchers to develop sophisticated AI systems faster.
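To illustrate what FP8 means numerically, here is a rough pure-Python sketch of rounding values to the E4M3 format (4 exponent bits, 3 mantissa bits, largest finite value 448) after per-tensor scaling. This is an illustration only, not the Transformer Engine API; the function names are hypothetical, and real libraries manage scaling factors internally.

```python
import math

E4M3_MAX = 448.0    # largest finite E4M3 value
E4M3_MIN_EXP = -6   # exponent of the smallest normal E4M3 value
MANTISSA_BITS = 3


def quantize_e4m3(x: float) -> float:
    """Round x to the nearest E4M3-representable value, saturating at +/-448."""
    if x == 0.0:
        return 0.0
    sign = -1.0 if x < 0 else 1.0
    a = min(abs(x), E4M3_MAX)
    # frexp gives a = m * 2**e with 0.5 <= m < 1, so the binary exponent is e - 1.
    exp = max(math.frexp(a)[1] - 1, E4M3_MIN_EXP)
    step = 2.0 ** (exp - MANTISSA_BITS)  # spacing between representable values
    return sign * min(round(a / step) * step, E4M3_MAX)


def fp8_round_trip(values):
    """Scale values into the E4M3 range, quantize, then scale back."""
    amax = max((abs(v) for v in values), default=0.0)
    if amax == 0.0:
        return list(values)
    scale = E4M3_MAX / amax
    return [quantize_e4m3(v * scale) / scale for v in values]


if __name__ == "__main__":
    print(quantize_e4m3(0.3))
    print(fp8_round_trip([0.001, 0.5, -2.0]))
```

With only 3 mantissa bits, each value keeps roughly two significant decimal digits, which is why FP8 training depends on careful scaling to keep tensors inside the representable range.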

Scientific Computing and Simulations

From climate modeling to molecular dynamics, the H100 is the go-to GPU for scientific applications requiring extreme precision and computational power. It enables faster simulations, helping scientists and researchers analyze data more efficiently.

Cloud Computing and Data Centers

With MIG technology, the H100 is optimized for cloud environments, enabling multiple workloads to run simultaneously with improved security and efficiency. Cloud providers benefit from enhanced virtualization capabilities, allowing them to offer AI-powered services at scale.
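As a simplified sketch of how MIG partitioning constrains what a provider can place on one GPU, the check below sums the compute and memory slices requested by a set of instance profiles. The profile table follows NVIDIA's naming for 80 GB GPUs but is an assumption here, and real hardware enforces additional placement rules that this check ignores.

```python
# An 80 GB GPU is modeled as 7 compute slices and 8 memory slices of about 10 GB each.
TOTAL_COMPUTE_SLICES = 7
TOTAL_MEMORY_SLICES = 8

# Assumed profile table: name -> (compute slices, memory slices).
PROFILES = {
    "1g.10gb": (1, 1),
    "2g.20gb": (2, 2),
    "3g.40gb": (3, 4),
    "4g.40gb": (4, 4),
    "7g.80gb": (7, 8),
}


def fits_on_one_gpu(requested: list[str]) -> bool:
    """Return True if the requested profiles fit within one GPU's slice budget."""
    compute = sum(PROFILES[name][0] for name in requested)
    memory = sum(PROFILES[name][1] for name in requested)
    return compute <= TOTAL_COMPUTE_SLICES and memory <= TOTAL_MEMORY_SLICES


if __name__ == "__main__":
    print(fits_on_one_gpu(["3g.40gb", "3g.40gb"]))
    print(fits_on_one_gpu(["4g.40gb", "4g.40gb"]))
```

A check like this is only a first filter for a scheduler; the actual set of valid combinations should come from the GPU driver's reported placements.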

Large-Scale Data Analytics

Organizations dealing with big data can leverage the H100 to perform real-time analytics, predictive modeling, and advanced statistical computations. Its high memory bandwidth ensures seamless data processing, reducing bottlenecks in complex datasets.
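Whether memory bandwidth or compute is the bottleneck can be estimated with a simple roofline model: attainable throughput is the lower of the compute peak and bandwidth multiplied by the work done per byte moved. The sketch below uses 34 teraflops of FP64 and 3.35 TB/s as assumed figures for the H100 SXM; substitute the values for your own hardware.

```python
def attainable_tflops(peak_tflops: float, bandwidth_tb_s: float, flops_per_byte: float) -> float:
    """Roofline bound: the lower of the compute ceiling and the memory ceiling."""
    return min(peak_tflops, bandwidth_tb_s * flops_per_byte)


def ridge_point(peak_tflops: float, bandwidth_tb_s: float) -> float:
    """Arithmetic intensity (FLOPs per byte) above which a kernel is compute-bound."""
    return peak_tflops / bandwidth_tb_s


if __name__ == "__main__":
    peak, bandwidth = 34.0, 3.35  # assumed H100 SXM figures (FP64 TFLOPS, TB/s)
    print(f"Ridge point: {ridge_point(peak, bandwidth):.1f} FLOPs/byte")
    for intensity in (0.5, 2.0, 50.0):
        bound = attainable_tflops(peak, bandwidth, intensity)
        print(f"{intensity} FLOPs/byte -> at most {bound:.2f} TFLOPS")
```

Many analytics kernels, such as scans, filters, and aggregations, perform only a few operations per byte, so they sit on the bandwidth-limited side of the ridge and benefit directly from faster memory.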

Cybersecurity and Encryption

With Confidential Computing, the H100 provides hardware-based protection for sensitive data and models while they are in use during AI training and inference. This is especially critical for industries dealing with confidential information, such as finance, healthcare, and defense.

Why Choose the NVIDIA H100 for AI and HPC?

The NVIDIA H100 is a game-changer for enterprises and researchers looking for unparalleled performance and efficiency. Here’s why it stands out:

- Industry-Leading AI Acceleration: Up to 6x faster than the A100 on FP8 workloads according to NVIDIA, making it a strong option for large-scale AI applications.

- Energy Efficiency: Despite its high performance, the H100 is optimized for power efficiency, reducing operational costs in data centers.

- Scalability and Flexibility: With MIG, NVLink, and PCIe 5.0 support, it seamlessly integrates into existing cloud infrastructures and multi-GPU configurations.

- Optimized for Next-Gen AI Models: Designed to handle future AI workloads, ensuring long-term value for organizations investing in AI.

Conclusion: Experience NVIDIA H100 with Cyfuture Cloud

The NVIDIA H100 GPU is redefining AI, HPC, and cloud computing, offering groundbreaking performance and efficiency. Whether you’re a researcher, developer, or enterprise, the H100 provides the power needed to accelerate innovation and drive progress in AI and data science.

To harness the full potential of NVIDIA H100 GPUs, consider Cyfuture Cloud, a leading cloud service provider offering high-performance GPU instances tailored for AI and machine learning workloads. With scalable infrastructure, cost-effective solutions, and enterprise-grade security, Cyfuture Cloud ensures seamless AI deployment and computing efficiency.

Unlock the power of NVIDIA H100 with Cyfuture Cloud today! Visit Cyfuture Cloud to explore our GPU cloud hosting solutions and take your AI projects to the next level.

© Cyfuture, All rights reserved.
