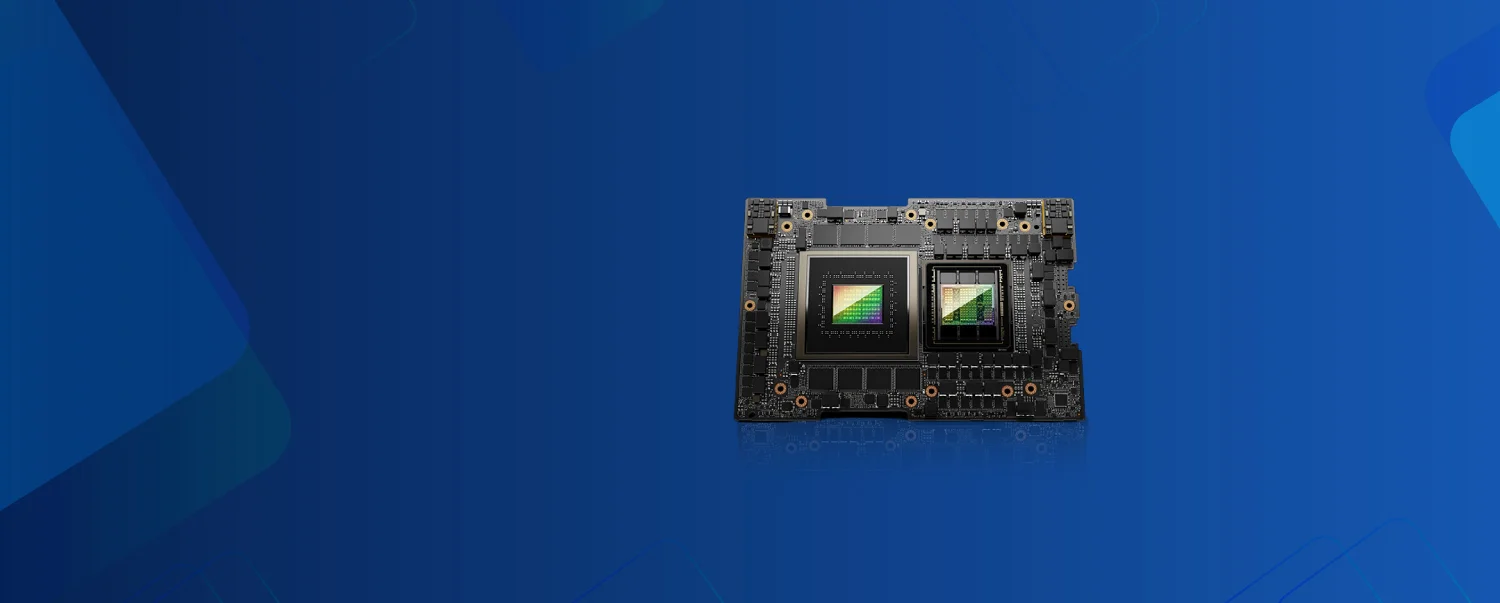

Power your most demanding AI, HPC, and data-intensive workloads with the GH200 GPU on Cyfuture Cloud. Experience advanced GPU-accelerated computing, ultra-fast memory bandwidth, and low-latency networking engineered for next-generation performance.

The GH200 GPU is NVIDIA's Grace Hopper Superchip. It combines an NVIDIA Hopper GPU with a 72-core, Arm-based NVIDIA Grace CPU in a single package, connected by the NVLink-C2C interconnect at 900 GB/s of bidirectional bandwidth. This coherent memory architecture removes the traditional CPU-GPU data bottleneck and pairs up to 96 GB of HBM3 GPU memory at 4 TB/s with 480 GB of LPDDR5X CPU memory. Designed for trillion-parameter AI models and exascale HPC workloads, the GH200 GPU delivers up to 3,958 TFLOPS of FP8 Tensor Core performance (peak, with sparsity), making it well suited to training large language models, scientific simulations, and climate modeling that need large memory capacity and bandwidth.

The GH200 GPU refers to NVIDIA's Grace Hopper Superchip, a revolutionary compute platform that integrates the NVIDIA Grace CPU and Hopper GPU architectures into a single package. Connected through the high-speed NVLink-C2C interconnect delivering 900 GB/s bidirectional bandwidth, the GH200 GPU eliminates traditional CPU-GPU bottlenecks, creating a unified memory model ideal for massive AI training and HPC workloads. With up to 72 Arm Neoverse V2 CPU cores, 480GB LPDDR5X CPU memory, and 96GB HBM3 GPU memory, this superchip delivers unprecedented performance for trillion-parameter language models and complex scientific simulations.

72 Grace CPU cores and the Hopper GPU communicate at 900 GB/s via NVLink-C2C, delivering 7× higher bandwidth than PCIe Gen5 and enabling coherent memory access across a 576 GB shared memory pool.

CPU LPDDR5X (480 GB at 500 GB/s) and GPU HBM3 (96 GB at 4 TB/s) operate as a single addressable memory space, eliminating data copies and accelerating large AI model workloads.

Delivers up to 3,958 TFLOPS of FP8 Tensor Core performance per superchip, and roughly 1 exaFLOPS of FP8 AI performance across a 256-superchip DGX GH200 system, optimized for trillion-parameter LLMs and advanced physics simulations.

Supports GPU partitioning (NVIDIA Multi-Instance GPU, MIG) for multiple concurrent workloads while maintaining full NVLink-C2C connectivity and memory coherence.

Multiple GH200 Superchips interconnect via NVLink domains, scaling up to 144 TB of shared memory (CPU and GPU memory combined) in DGX GH200 systems for massive parallel processing.

Runs NVIDIA AI Enterprise, CUDA, cuDNN, and the HPC SDK natively, supporting all major AI frameworks without requiring code changes.
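The headline figures in the feature list above follow from simple arithmetic, and a short Python sketch can make the relationships explicit. The per-superchip numbers come from this page; the PCIe Gen5 x16 figure (128 GB/s bidirectional) and the 256-superchip domain size are assumptions introduced for the comparison, and every name below is illustrative rather than part of any NVIDIA or Cyfuture API.

```python
# Back-of-the-envelope check of the GH200 figures quoted in the feature list.
# All names here are hypothetical helpers, not a vendor API.

NVLINK_C2C_GBPS = 900   # NVLink-C2C bidirectional bandwidth, GB/s (from the text)
CPU_MEM_GB = 480        # LPDDR5X CPU memory per superchip (from the text)
GPU_MEM_GB = 96         # HBM3 GPU memory per superchip (from the text)
FP8_TFLOPS = 3958       # peak FP8 tensor throughput per superchip (from the text)

# Assumption: PCIe Gen5 x16 carries about 64 GB/s per direction,
# i.e. 128 GB/s bidirectional.
PCIE_GEN5_X16_GBPS = 128

# Assumption: the largest NVLink domain links 256 superchips.
SUPERCHIPS = 256


def coherent_pool_gb() -> int:
    """CPU plus GPU memory addressable as one pool on a single superchip."""
    return CPU_MEM_GB + GPU_MEM_GB


def nvlink_vs_pcie() -> float:
    """Ratio of NVLink-C2C bandwidth to the assumed PCIe Gen5 x16 bandwidth."""
    return NVLINK_C2C_GBPS / PCIE_GEN5_X16_GBPS


def domain_memory_tb() -> float:
    """Total shared memory across the assumed domain, in TB (1 TB = 1024 GB)."""
    return SUPERCHIPS * coherent_pool_gb() / 1024


def domain_fp8_exaflops() -> float:
    """Aggregate peak FP8 throughput across the assumed domain, in exaFLOPS."""
    return SUPERCHIPS * FP8_TFLOPS / 1_000_000


if __name__ == "__main__":
    print(f"Coherent pool per superchip: {coherent_pool_gb()} GB")
    print(f"NVLink-C2C vs PCIe Gen5 x16: {nvlink_vs_pcie():.2f}x")
    print(f"Shared memory across domain: {domain_memory_tb():.0f} TB")
    print(f"Peak FP8 across domain: {domain_fp8_exaflops():.2f} exaFLOPS")
```

Under these assumptions the sketch gives a 576 GB pool per superchip, a bandwidth ratio of about 7, 144 TB of shared memory, and about 1 exaFLOPS of peak FP8 throughput across the domain. The exaFLOPS-class figure is therefore a system-level number, not a per-superchip one.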

NVIDIA GH200 GPU combines Grace CPU with Hopper GPU architecture, forming a unified superchip optimized for AI and high-performance computing workloads.

900 GB/s bidirectional CPU–GPU interconnect delivers up to 7× higher bandwidth than PCIe Gen5 for seamless, low-latency data transfer.

Up to 96 GB HBM3 GPU memory combined with 480 GB LPDDR5X CPU memory enables efficient processing of trillion-parameter AI models.

Up to 4 TB/s HBM3 GPU bandwidth supports rapid data access for large-scale model training and complex scientific simulations.

Grace CPU with 72 Arm Neoverse V2 cores delivers significantly higher performance-per-watt compared to traditional x86-based systems.

Up to 3,958 TFLOPS of FP8 tensor core performance accelerates generative AI training and inference for massive language models.

A single unified CPU–GPU memory space eliminates data copying and reduces latency for memory-intensive AI workloads.

Supports NVLink domain scaling across multiple GH200 systems, enabling exascale AI and HPC supercomputing clusters.

Cyfuture Cloud stands out as the premier choice for GH200 GPU deployments, offering NVIDIA's Grace Hopper Superchip alongside data center GPUs optimized for massive-scale AI and HPC workloads. The GH200 combines Arm-based Grace CPU cores with the Hopper GPU architecture, and in the dual-superchip NVL2 configuration it delivers up to 288 GB of high-bandwidth memory and 10 TB/s of memory bandwidth, a good fit for trillion-parameter AI models that demand coherent CPU-GPU memory access. Cyfuture Cloud removes the complexity of on-premises infrastructure by offering scalable GH200 instances through its GPU Droplets platform, with NVLink-C2C interconnects operating at 900 GB/s for up to 7× faster data transfers than PCIe Gen5.

Businesses choose Cyfuture Cloud for GH200 GPU hosting because of its enterprise-grade reliability, pay-as-you-go pricing, and full-stack NVIDIA software support including AI Enterprise and HPC SDK. Data Center GPUs like the GH200 excel in training massive LLMs, generative AI pipelines, and scientific simulations, with Cyfuture providing low-latency global data centers, automated orchestration, and zero upfront CapEx. This combination ensures organizations can rapidly deploy production-ready environments for complex workloads while maintaining data sovereignty and compliance through MeitY-empanelled facilities.

Thanks to Cyfuture Cloud's reliable and scalable Cloud CDN solutions, we were able to eliminate latency issues and ensure smooth online transactions for our global IT services. Their team's expertise and dedication to meeting our needs were truly impressive.

Since partnering with Cyfuture Cloud for complete managed services, we at Boloro Global have seen a significant improvement in our IT infrastructure, with 24x7 monitoring and support, network security, and data management. The team at Cyfuture Cloud provided customized solutions that perfectly fit our needs and exceeded our expectations.

Cyfuture Cloud's colocation services helped us overcome the challenges of managing our own hardware and multiple ISPs. With their better connectivity, improved network security, and redundant power supply, we have been able to eliminate telecom fraud efficiently. Their managed services and support have been exceptional, and we have been satisfied customers for 6 years now.

With Cyfuture Cloud's secure and reliable co-location facilities, we were able to set up our Certifying Authority with peace of mind, knowing that our sensitive data is in good hands. We couldn't have done it without Cyfuture Cloud's unwavering commitment to our success.

Cyfuture Cloud has revolutionized our email services with Outlook365 on Cloud Platform, ensuring seamless performance, data security, and cost optimization.

With Cyfuture's efficient solution, we were able to conduct our examinations and recruitment processes seamlessly without any interruptions. Their dedicated lease line and fully managed services ensured that our operations were always up and running.

Thanks to Cyfuture's private cloud services, our European and Indian teams are now working seamlessly together with improved coordination and efficiency.

The Cyfuture team helped us streamline our database management and provided us with excellent dedicated server and LMS solutions, ensuring seamless operations across locations and optimizing our costs.

The GH200 GPU is NVIDIA’s Grace Hopper Superchip combining a 72-core Arm-based Grace CPU with Hopper GPU architecture, connected via 900 GB/s NVLink-C2C for coherent memory access across up to 576 GB of fast memory with HBM3, or up to 624 GB with HBM3e.

GH200 GPU features 14,592 CUDA cores, 96–144 GB HBM3/HBM3e GPU memory with up to 4.9 TB/s bandwidth, 480 GB LPDDR5X CPU memory, and configurable 450–1000 W TDP.

Cyfuture Cloud delivers GH200 GPU via scalable GPU instances with pay-as-you-go pricing, NVLink interconnects, and full NVIDIA software stack support in MeitY-empanelled data centers.

GH200 GPU is optimized for trillion-parameter LLM training, generative AI inference, graph neural networks, HPC simulations, and memory-intensive workloads requiring unified CPU–GPU memory.

GH200 GPU delivers up to 7× faster CPU–GPU data transfers via NVLink-C2C, offers more GPU memory than the 80 GB H100 (96 GB HBM3 or 144 GB HBM3e) plus 480 GB of coherent CPU memory, and enables higher inference efficiency for large models exceeding H100 memory limits.

Yes, GH200 GPU instances are available on Cyfuture Cloud with instant deployment, automated orchestration, and enterprise-grade reliability.

GH200 GPU supports 96 GB HBM3 or 144 GB HBM3e GPU memory along with 480 GB LPDDR5X CPU memory, providing up to 624 GB of coherent fast memory.

Cyfuture Cloud supports GH200 NVLink configurations scaling from dual-superchip setups to large NVLink Switch fabrics with up to 256 GH200 GPUs for exascale AI and HPC clusters.

GH200 GPU workloads are optimized using NVIDIA AI Enterprise, CUDA, cuDNN, HPC SDK, Magnum IO, and full support for PyTorch, TensorFlow, and enterprise AI frameworks.

Cyfuture Cloud offers cost-effective GH200 GPU hosting with zero CapEx, strong data sovereignty compliance, low-latency access, and infrastructure optimized for production-grade AI deployments.
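To make the memory figures in the answers above concrete, the following Python sketch estimates where a model's weights would land on a single GH200. It is a rough sizing aid under stated assumptions, not a capacity planner: it counts weights only (no activations, KV cache, or optimizer state), uses decimal gigabytes, and the function names, thresholds, and placement labels are hypothetical.

```python
# Rough weights-only sizing against the GH200 memory figures quoted on this page.
# Assumption: activations, KV cache, and optimizer state are ignored,
# so real workloads need more headroom than this estimate suggests.

HBM3_GB = 96       # GPU memory, HBM3 variant (from the text)
HBM3E_GB = 144     # GPU memory, HBM3e variant (from the text)
LPDDR5X_GB = 480   # CPU memory reachable over NVLink-C2C (from the text)

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "fp8": 1}


def weights_gb(params_billion: float, dtype: str) -> float:
    """Size of the weights in decimal GB: 1e9 params * bytes / 1e9 bytes per GB."""
    return params_billion * BYTES_PER_PARAM[dtype]


def placement(params_billion: float, dtype: str, hbm_gb: int = HBM3_GB) -> str:
    """Classify where the weights fit on one superchip (hypothetical labels)."""
    need = weights_gb(params_billion, dtype)
    if need <= hbm_gb:
        return "fits in GPU HBM"
    if need <= hbm_gb + LPDDR5X_GB:
        return "spills into coherent CPU memory"
    return "needs multiple superchips"


if __name__ == "__main__":
    for params, dtype, hbm in [(70, "fp8", HBM3_GB),
                               (70, "fp16", HBM3_GB),
                               (70, "fp16", HBM3E_GB),
                               (1000, "fp16", HBM3E_GB)]:
        print(f"{params}B {dtype} with {hbm} GB HBM: {placement(params, dtype, hbm)}")
```

In this estimate, a 70-billion-parameter model in FP16 needs 140 GB for weights, which exceeds 96 GB of HBM3 but stays within the coherent CPU-GPU pool, while a trillion-parameter model in FP16 needs about 2,000 GB and calls for a multi-superchip NVLink configuration.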

If your site is currently hosted somewhere else and you need a better plan, you may always move it to our cloud. Try it and see!

Let’s talk about the future, and make it happen!
