
Load Balancing in Cloud Computing


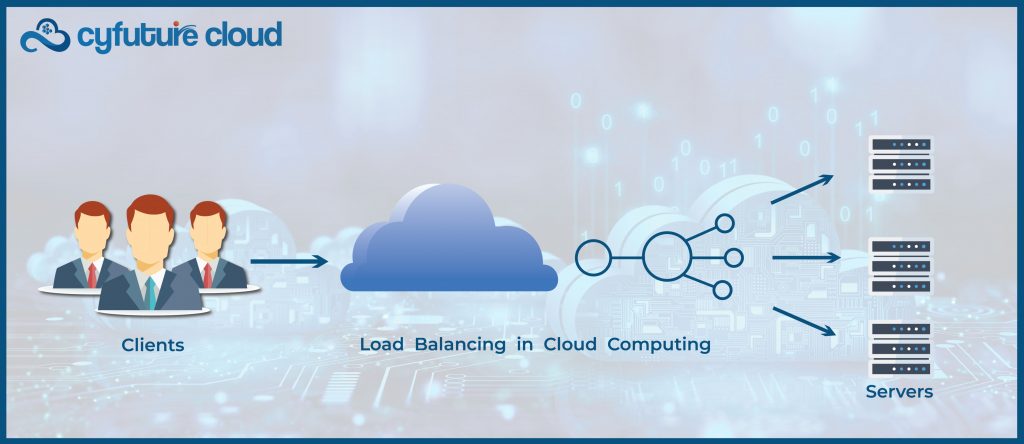

What is load balancing in cloud computing? Load balancing in cloud computing is the distribution of traffic and workloads so that no single server or computer is overloaded, underloaded, or left idle. To improve overall cloud performance, load balancing optimizes several constrained metrics, such as execution time, response time, and system stability. In the load-balancing architecture used in cloud computing, a load balancer sits between client devices and servers and controls the traffic that flows between them.

In cloud computing, load balancing distributes traffic, workloads, and computing resources equally throughout a cloud environment to increase cloud applications’ efficiency and dependability. With the use of cloud load balancing, businesses can divide host resources and client requests among a number of computers, application servers, or computer networks.

The main objective of load balancing in cloud computing is to make the most of an organization's resources while reducing response times for application users.

Techniques for Load Balancing in Cloud Computing

To prevent any one server from becoming overloaded, load balancing in cloud computing manages large workloads and distributes traffic among cloud servers. As a result, performance improves and downtime and latency are reduced.

To reduce latency and increase server availability and dependability, advanced load balancing in cloud computing spreads traffic over several servers. Effective cloud load-balancing implementations use a variety of load-balancing approaches to limit the impact of server failures and improve performance. For instance, before rerouting traffic during a failover, a load balancer can assess the distance between servers or the load on those servers.

Load balancers can be networked hardware devices or purely software-defined services. In vendor-managed cloud hosting environments, customers typically cannot deploy their own hardware load balancers, and such appliances are poorly suited to managing cloud traffic in any case. Because they can run in any location and environment, software-based load balancers are better suited to cloud infrastructures and applications.

DNS load balancing is a software-defined method used in cloud computing that distributes client requests for a domain across several servers through the Domain Name System (DNS). To spread requests evenly among servers, the DNS server returns the list of IP addresses in a different order with each response to a new client request. When combined with health checks, DNS load balancing can provide automatic failover by removing unresponsive servers from its responses.

In the way it manages traffic to prevent congestion, load balancing in cloud computing is similar to a police officer directing traffic. The officer may use simple, static strategies, such as counting cars or letting traffic through for a fixed number of seconds at a time, but can also use dynamic techniques that adapt to the ebb and flow of traffic. The same principles govern how load balancing in cloud computing prevents the lost revenue and poor user experience caused by overloaded servers and applications.

Load Balancing Algorithms in Cloud Computing

In cloud computing, there are many distinct types of load-balancing algorithms, some more widely used than others. They differ in how they manage and distribute network load and in how they choose which servers should handle client requests. The following are eight of the most common load-balancing algorithms and approaches in cloud computing:

1. Round Robin

This algorithm forwards incoming requests to each server in turn, in a simple, repeating cycle. Standard round robin is among the most common load-balancing algorithms in cloud computing and is also the simplest to implement. However, it assumes that all servers have equal capacity, so it is not the most efficient choice when servers differ.
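The following Python sketch shows plain round robin next to a smooth weighted round-robin variant, similar to the scheme nginx uses for weighted upstream servers, which addresses the equal-capacity assumption. The class names, server names, and weights are hypothetical.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Plain round robin: every server gets the same share of requests."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("need at least one server")
        self._cycle = cycle(servers)

    def next_server(self):
        return next(self._cycle)


class SmoothWeightedRoundRobin:
    """Weighted variant: servers with a higher weight receive proportionally more requests."""

    def __init__(self, weights):
        self._weights = dict(weights)  # server -> integer weight (assumed relative capacity)
        self._current = {s: 0 for s in self._weights}
        self._total = sum(self._weights.values())

    def next_server(self):
        # Raise every server's running score by its weight, pick the highest,
        # then lower the winner by the total weight so picks interleave smoothly.
        for server, weight in self._weights.items():
            self._current[server] += weight
        chosen = max(self._current, key=self._current.get)
        self._current[chosen] -= self._total
        return chosen


rr = RoundRobinBalancer(["app-1", "app-2", "app-3"])
print([rr.next_server() for _ in range(5)])
# ['app-1', 'app-2', 'app-3', 'app-1', 'app-2']

wrr = SmoothWeightedRoundRobin({"big": 5, "small-1": 1, "small-2": 1})
print([wrr.next_server() for _ in range(7)])
# ['big', 'big', 'small-1', 'big', 'small-2', 'big', 'big']
```

The weighted version still cycles deterministically, but a server with weight 5 receives five of every seven requests, and those requests are spread out rather than sent in one burst.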

2. Least Connections

This method is well suited to periods of heavy traffic. Least connections sends each new request to the server with the fewest active connections, which distributes traffic more evenly among the available servers.
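A minimal Python sketch of least connections, assuming the balancer is told when each connection opens and closes. The `acquire`/`release` methods and server names are hypothetical.

```python
class LeastConnectionsBalancer:
    """Sends each new request to the server with the fewest active connections."""

    def __init__(self, servers):
        self.active = {s: 0 for s in servers}  # server -> open connection count

    def acquire(self):
        # min() returns the first server with the lowest count, so ties go
        # to the server listed first.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call when a connection closes so the count stays accurate.
        self.active[server] -= 1


lb = LeastConnectionsBalancer(["app-1", "app-2"])
first = lb.acquire()   # app-1 (tie broken by order)
second = lb.acquire()  # app-2
third = lb.acquire()   # app-1
lb.release("app-2")    # app-2's connection finished
print(lb.acquire())    # app-2 (0 active vs. 2 on app-1)
```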

3. IP Hash

With this simple load-balancing technique, requests are distributed according to IP address. The algorithm generates a hash key for each client request and uses it to assign the request to a server. The source and destination IP addresses are combined and hashed (not encrypted) to produce the key, so requests from the same client are consistently sent to the same server as long as the server pool does not change.
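A minimal Python sketch of IP hashing follows. The `pick_server` function, the `|` separator, and the choice of SHA-256 are assumptions for illustration; real load balancers use their own hash functions.

```python
import hashlib


def pick_server(src_ip, dst_ip, servers):
    """Map an address pair to a server by hashing it (hashing, not encryption)."""
    key = f"{src_ip}|{dst_ip}".encode()
    # hashlib gives the same digest in every process; Python's built-in hash()
    # for strings is randomized per process, so it would break stickiness across restarts.
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["app-1", "app-2", "app-3"]
chosen = pick_server("203.0.113.7", "198.51.100.10", servers)
# The same client/destination pair always lands on the same server
# while the server list stays the same.
assert chosen == pick_server("203.0.113.7", "198.51.100.10", servers)
```

With simple modulo hashing like this, adding or removing a server remaps most clients to different servers; consistent hashing is the usual way to reduce that churn.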

4. Least Response Time

The least response time method is a dynamic strategy similar to least connections: it routes traffic to the server with the lowest average response time and the fewest active connections.
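The article does not specify how the two signals are combined, so the Python sketch below uses one hypothetical rule: an exponentially weighted moving average of response time, multiplied by the number of active connections plus one, with the raw connection count as a tie-breaker. The class name, `alpha` value, and scoring formula are assumptions.

```python
class LeastResponseTimeBalancer:
    """Prefers servers that have been answering quickly and are not busy."""

    def __init__(self, servers, alpha=0.3):
        self.alpha = alpha                       # smoothing factor (assumed value)
        self.avg_ms = {s: 0.0 for s in servers}  # moving average of response time
        self.active = {s: 0 for s in servers}    # open connections per server

    def _score(self, server):
        # Hypothetical scoring rule: average latency scaled by queue length,
        # with the raw connection count as a tie-breaker.
        return (self.avg_ms[server] * (self.active[server] + 1), self.active[server])

    def acquire(self):
        server = min(self.avg_ms, key=self._score)
        self.active[server] += 1
        return server

    def release(self, server, elapsed_ms):
        self.active[server] -= 1
        # Exponentially weighted moving average of observed response times.
        self.avg_ms[server] = (1 - self.alpha) * self.avg_ms[server] + self.alpha * elapsed_ms


lb = LeastResponseTimeBalancer(["fast", "slow"])
a, b = lb.acquire(), lb.acquire()  # no history yet: one request each
lb.release(a, elapsed_ms=20)
lb.release(b, elapsed_ms=200)
print(lb.acquire())  # fast
```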

5. Least Bandwidth

The least bandwidth approach, another form of dynamic load balancing in cloud computing, routes client requests to the server that has used the least bandwidth over the most recent period.
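A minimal Python sketch, assuming the balancer records how many bytes each server sends and compares servers over a sliding time window. The class name and the 10-second window are hypothetical; timestamps can be passed in explicitly so the example is deterministic.

```python
import time
from collections import deque


class LeastBandwidthBalancer:
    """Routes to the server that has sent the fewest bytes within a recent time window."""

    def __init__(self, servers, window_s=10.0):
        self.window_s = window_s                      # look-back window in seconds (assumed)
        self.samples = {s: deque() for s in servers}  # server -> (timestamp, bytes) in time order

    def record(self, server, nbytes, now=None):
        now = time.monotonic() if now is None else now
        self.samples[server].append((now, nbytes))

    def _recent_bytes(self, server, now):
        q = self.samples[server]
        while q and now - q[0][0] > self.window_s:
            q.popleft()  # forget traffic that fell out of the window
        return sum(nbytes for _, nbytes in q)

    def pick(self, now=None):
        now = time.monotonic() if now is None else now
        return min(self.samples, key=lambda s: self._recent_bytes(s, now))


lb = LeastBandwidthBalancer(["app-1", "app-2"], window_s=10)
lb.record("app-1", 5_000, now=0)
lb.record("app-2", 1_000, now=0)
print(lb.pick(now=1))  # app-2 (1,000 bytes vs. 5,000)
lb.record("app-2", 9_000, now=5)
print(lb.pick(now=6))  # app-1 (5,000 bytes vs. 10,000)
```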

6. Layer 4 Load Balancer

They route traffic packets based on the destination IP addresses and the TCP or UDP ports the packets use. Using Network Address Translation (NAT), L4 load balancers map the destination IP address to the correct server rather than inspecting the actual packet content.
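The Python sketch below models the idea with a connection table keyed by the 5-tuple (protocol, source IP and port, destination IP and port) and destination NAT. It is a simplified, hypothetical model: new connections are assigned round robin, and return-path translation, connection timeouts, and real packet I/O are omitted.

```python
from dataclasses import dataclass, replace
from itertools import cycle


@dataclass(frozen=True)
class Packet:
    protocol: str   # "TCP" or "UDP"
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    payload: bytes  # carried through untouched; an L4 balancer never reads it


class L4Balancer:
    def __init__(self, backends):
        self._next_backend = cycle(backends)  # (ip, port) pairs, assigned round robin
        self._flows = {}                      # connection 5-tuple -> chosen backend

    def forward(self, pkt):
        flow = (pkt.protocol, pkt.src_ip, pkt.src_port, pkt.dst_ip, pkt.dst_port)
        if flow not in self._flows:
            # First packet of a new connection: choose a backend and remember it,
            # so every later packet of the same connection goes to the same server.
            self._flows[flow] = next(self._next_backend)
        ip, port = self._flows[flow]
        # Destination NAT: rewrite only header fields.
        return replace(pkt, dst_ip=ip, dst_port=port)


lb = L4Balancer([("10.0.0.1", 8080), ("10.0.0.2", 8080)])
syn = Packet("TCP", "203.0.113.5", 40000, "198.51.100.1", 80, b"")
out = lb.forward(syn)
print(out.dst_ip, out.dst_port)  # 10.0.0.1 8080
```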

7. Layer 7 Load Balancer

L7 load balancers operate at the application layer of the OSI model and examine HTTP headers, SSL session IDs, and other information to decide how to route requests to servers. Because they use more context to make routing decisions, L7 load balancers can route requests more intelligently than L4 load balancers, but they are also more computationally intensive.
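A minimal Python sketch of Layer 7 routing that inspects the HTTP `Host` header and the request path. The routing rules, host names, and pool names are hypothetical, and header lookup is case-sensitive here for brevity, whereas real HTTP header names are case-insensitive.

```python
from itertools import cycle


class L7Router:
    """Chooses a backend pool by inspecting the HTTP Host header and request path."""

    def __init__(self, rules, default_pool):
        # rules: (host, path_prefix, servers) tuples, checked in order
        self._rules = [(host, prefix, cycle(servers)) for host, prefix, servers in rules]
        self._default = cycle(default_pool)

    def route(self, path, headers):
        host = headers.get("Host", "")
        for rule_host, prefix, pool in self._rules:
            if host == rule_host and path.startswith(prefix):
                return next(pool)  # round robin within the matched pool
        return next(self._default)


router = L7Router(
    rules=[
        ("shop.example.com", "/api/", ["api-1", "api-2"]),
        ("shop.example.com", "/static/", ["static-1"]),
    ],
    default_pool=["web-1"],
)
print(router.route("/api/orders", {"Host": "shop.example.com"}))       # api-1
print(router.route("/static/logo.png", {"Host": "shop.example.com"}))  # static-1
print(router.route("/", {"Host": "blog.example.com"}))                 # web-1
```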

8. Global Server Load Balancing (GSLB)

Global Server Load Balancing (GSLB) extends L4 and L7 load balancing across multiple data centers, allowing very large volumes of traffic to be distributed efficiently while maintaining performance. GSLB is especially useful for managing application requests from geographically dispersed users.
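A minimal Python sketch of one GSLB decision: send a client to the closest healthy data center. Straight-line (haversine) distance stands in for the latency and load measurements that production GSLB systems typically use, and the region names and coordinates are illustrative assumptions.

```python
import math


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points on Earth, in kilometres."""
    earth_radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


def pick_region(client_lat, client_lon, regions):
    """Return the name of the closest healthy data center."""
    healthy = [r for r in regions if r["healthy"]]
    if not healthy:
        raise RuntimeError("no healthy data center available")
    return min(healthy, key=lambda r: haversine_km(client_lat, client_lon, r["lat"], r["lon"]))["name"]


regions = [
    {"name": "mumbai", "lat": 19.08, "lon": 72.88, "healthy": True},
    {"name": "frankfurt", "lat": 50.11, "lon": 8.68, "healthy": True},
    {"name": "virginia", "lat": 38.90, "lon": -77.04, "healthy": True},
]
print(pick_region(28.61, 77.21, regions))  # mumbai (client near Delhi)
regions[0]["healthy"] = False
print(pick_region(28.61, 77.21, regions))  # frankfurt (failover to the next-closest site)
```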

What does load balancing as a service entail in cloud computing?

Instead of on-premises, specialised traffic-routing appliances that require in-house configuration and maintenance, several cloud providers offer load balancing as a service (LBaaS), which customers use on an as-needed basis. LBaaS is one of the more popular forms of load balancing in cloud computing, and it balances workloads much as traditional load balancing does.

Unlike traditional load balancing, which distributes traffic among a group of servers within a single data centre, LBaaS balances workloads across servers in a cloud environment and is itself delivered as a subscription or on-demand service.

Some advantages of LBaaS are:

| Advantage | Description |
|---|---|
| Scalability | Load balancing services can be quickly and easily scaled to handle traffic spikes without the need to manually configure extra physical equipment. |
| Availability | Requests can be routed to the server geographically nearest the user, which reduces latency and keeps the service highly available even when a server is offline. |
| Cost savings | Compared to hardware-based appliances, LBaaS is often less expensive in terms of money, time, effort, and internal resources, for both the initial investment and ongoing maintenance. |

Aspects of cloud server load balancing

| Aspect | Description |
|---|---|
| Definition | The process of distributing incoming network traffic across multiple servers or resources in a cloud. |
| Objective | Ensures even resource utilization, prevents overloads, and enhances the performance of cloud services. |
| Types | 1. Layer 4 load balancing: distributes traffic based on network- and transport-layer data. 2. Layer 7 load balancing: uses application-specific data to distribute traffic. |
| Algorithms | Common algorithms include Round Robin, Least Connections, Weighted Round Robin, and IP Hashing. |
| Health Checks | Regular monitoring of server health and availability so that traffic is routed only to functioning servers. |
| Benefits | 1. Enhances reliability and availability. 2. Optimizes resource usage. 3. Improves performance and response times. |
| Load Balancer Types | Hardware-based, software-based, and cloud-based load balancers, offering varying scalability and features. |
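The health checks described in the table can be sketched in Python as follows. The `HealthChecker` class, the `probe` callable (in practice often an HTTP request to a health endpoint), and the `fall`/`rise` thresholds are hypothetical. Requiring several consecutive results before changing a server's state keeps a single slow or dropped probe from flapping that server in and out of rotation.

```python
class HealthChecker:
    """Marks servers up or down from repeated probe results."""

    def __init__(self, servers, probe, fall=3, rise=2):
        self.probe = probe  # callable(server) -> bool, e.g. an HTTP GET to a health endpoint (assumed)
        self.fall = fall    # consecutive failures needed to mark a healthy server down
        self.rise = rise    # consecutive successes needed to bring a down server back
        self.healthy = {s: True for s in servers}
        self._streak = {s: 0 for s in servers}  # consecutive results that disagree with current state

    def run_once(self):
        for server, is_up in list(self.healthy.items()):
            ok = self.probe(server)
            if ok == is_up:
                self._streak[server] = 0  # result confirms current state
                continue
            self._streak[server] += 1
            if self._streak[server] >= (self.rise if ok else self.fall):
                self.healthy[server] = ok
                self._streak[server] = 0

    def healthy_servers(self):
        """Servers the load balancer may currently route traffic to."""
        return [s for s, up in self.healthy.items() if up]


status = {"app-1": True, "app-2": False}  # simulated probe results
hc = HealthChecker(["app-1", "app-2"], probe=lambda s: status[s])
for _ in range(3):
    hc.run_once()
print(hc.healthy_servers())  # ['app-1']
```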

Take Away:

When should you use load balancing in cloud computing? If this is still your question, contact the experts at Cyfuture Cloud. In short, high-performance cloud computing environments need load balancers.

In conclusion, load balancing is an indispensable aspect of cloud computing infrastructure, ensuring optimal performance, reliability, and scalability of applications and services. By intelligently distributing incoming network traffic across multiple servers or resources, load balancing mitigates the risk of bottlenecks and enhances overall system efficiency.

This technique plays a crucial role in maintaining consistent performance levels, even during peak usage periods, thereby improving user experience and maximizing resource utilization. As cloud computing continues to evolve and scale, load balancing remains a foundational element, enabling businesses to meet the demands of modern digital environments effectively.


© Cyfuture, All rights reserved.
