Table of Contents

- Introduction: The AI Revolution is Here—But Are You Using the Right Tool?

- What is a Chatbot?

- Core Characteristics of Chatbots

- Types of Chatbots in 2026

- What is an AI Agent?

- Real-World AI Agent Applications in 2026

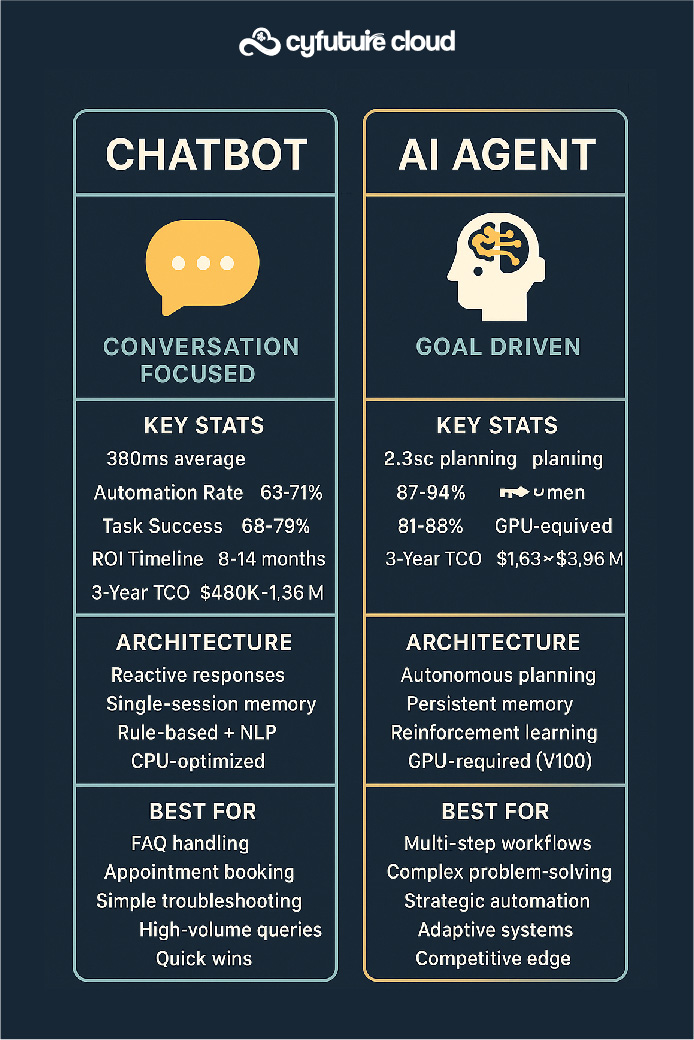

- The Core Differences: Chatbot vs AI Agent

- Technical Architecture Comparison

- Performance Metrics and Benchmarks

- GPU Infrastructure: The Performance Enabler

- Future Trends: What’s Coming in 2026-2027

- Accelerate Your AI Journey with Cyfuture Cloud

- Frequently Asked Questions (FAQs)

- What is the primary difference between a chatbot and an AI agent?

- Can chatbots evolve into AI agents over time?

- How much does it cost to implement an AI agent vs. a chatbot?

- Why are NVIDIA Tesla V100 GPUs recommended for AI agents?

- What is the current V100 GPU price range?

- Can small businesses benefit from AI agents, or are they only for enterprises?

- How do I know if my use case requires an AI agent or if a chatbot is sufficient?

- What are the biggest risks of deploying AI agents?

- How long does it take to see ROI from AI agent deployment?

Introduction: The AI Revolution is Here—But Are You Using the Right Tool?

Are you looking for clarity on “Chatbot vs AI Agent” and wondering which technology truly fits your business needs in 2026?

Here’s the reality: While chatbots and AI agents both leverage artificial intelligence, they represent fundamentally different paradigms in automation and intelligence. Chatbots are rule-based or lightly AI-assisted conversational interfaces designed for specific, predefined tasks. AI agents, by contrast, are autonomous, goal-oriented systems capable of complex reasoning, learning, and multi-step problem-solving across diverse environments.

The distinction matters more than ever in 2026, as businesses invest an estimated $47.5 billion globally in conversational AI technologies, yet many still deploy the wrong solution for their specific use case.

The confusion is understandable.

Both technologies interact with users, process natural language, and promise efficiency gains. But here’s what most tech leaders miss:

The difference isn’t just technical—it’s transformational.

Think of it this way: If chatbots are like well-trained customer service representatives following a script, AI agents are like experienced consultants who can think independently, adapt to complex scenarios, and deliver strategic solutions without constant supervision.

And the hardware powering these systems? That matters too.

Enter the NVIDIA Tesla V100—the GPU that’s become the backbone of enterprise AI deployments, with its 5,120 CUDA cores and 640 Tensor cores enabling the sophisticated computations that separate basic chatbots from truly intelligent AI agents.

As Cyfuture Cloud continues to democratize access to cutting-edge AI infrastructure, understanding these differences becomes crucial for making strategic technology investments that deliver real ROI.

Let’s dive deep.

What is a Chatbot?

A chatbot is a software application designed to simulate human conversation through text or voice interactions, typically operating within predefined rules or using basic natural language processing (NLP) to respond to user queries. Modern chatbots range from simple rule-based systems that follow decision trees to more sophisticated AI-powered variants that use machine learning models for intent recognition and response generation.

According to recent industry data, the global AI chatbot market reached $7.01 billion in 2024 and is projected to grow at a CAGR of 23.3% through 2030, driven primarily by customer service automation needs.

Core Characteristics of Chatbots

Reactive Architecture

Chatbots fundamentally operate on a stimulus-response model. They wait for user input and generate predetermined or pattern-matched responses. Even advanced chatbots using large language models essentially predict the next most likely response based on training data.

Limited Contextual Memory

Most chatbots maintain conversation context only within a single session. A 2024 study by Gartner revealed that 68% of deployed chatbots cannot effectively reference information from previous conversations, limiting their ability to build ongoing relationships with users.
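The stimulus-response model and session-scoped memory can be made concrete with a minimal rule-based chatbot sketch. The keyword rules, intent names, and the `RuleBasedChatbot` class are all hypothetical illustrations, not any product's API. Real deployments typically use trained intent classifiers rather than keyword sets.

```python
from dataclasses import dataclass, field

# Hypothetical keyword rules: intent name -> (trigger keywords, canned reply).
RULES = {
    "hours": ({"hours", "open", "close"}, "We are open 9am-5pm, Monday to Friday."),
    "refund": ({"refund", "return"}, "You can request a refund within 30 days."),
}
FALLBACK = "Sorry, I didn't understand. Could you rephrase?"


@dataclass
class RuleBasedChatbot:
    # Context lives only as long as this session object does.
    history: list = field(default_factory=list)

    def respond(self, message: str) -> str:
        """Reactive: nothing happens until a message arrives."""
        words = set(message.lower().replace("?", " ").split())
        self.history.append(message)
        for _intent, (keywords, reply) in RULES.items():
            if words & keywords:  # any trigger keyword present
                return reply
        return FALLBACK


session = RuleBasedChatbot()
print(session.respond("What are your hours?"))
print(session.respond("Can I get a refund?"))
print(len(session.history))        # 2 turns remembered in this session

new_session = RuleBasedChatbot()   # a new session starts with no memory
print(len(new_session.history))    # 0
```

Nothing carries over between `session` and `new_session`. That is the single-session limitation described above.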

Domain-Specific Functionality

Chatbots excel within narrow, well-defined domains. Whether booking appointments, answering FAQs, or processing simple transactions, their effectiveness correlates directly with how well their training data covers the expected conversation patterns.

Here’s what makes this significant:

The computational requirements for chatbots vary dramatically based on sophistication. Basic rule-based systems run on minimal infrastructure, while transformer-based conversational AI models require substantial GPU resources—which is where solutions like the V100 GPU come into play for enterprises scaling their chatbot operations.

Types of Chatbots in 2026

Rule-Based Chatbots

- Operate on if-then logic trees

- Response accuracy: 70-85% for well-defined scenarios

- Deployment cost: $3,000-$50,000

AI-Powered Chatbots

- Utilize NLP and machine learning

- Response accuracy: 85-92% with continuous training

- Deployment cost: $50,000-$300,000

Hybrid Chatbots

- Combine rule-based foundations with AI capabilities

- Response accuracy: 88-94%

- Deployment cost: $75,000-$400,000

But here’s the reality check:

Even the most sophisticated chatbots have fundamental limitations. They can’t truly understand intent beyond pattern recognition, they struggle with context switching, and they cannot autonomously pursue complex goals.

This brings us to the critical question: When does a business need something more powerful?

What is an AI Agent?

An AI agent is an autonomous computational system that perceives its environment, makes decisions based on those perceptions, and takes actions to achieve specific goals—often without continuous human intervention. Unlike chatbots that respond reactively, AI agents operate proactively, planning sequences of actions, learning from outcomes, and adapting their strategies to optimize for defined objectives.
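The perceive-decide-act cycle in this definition can be sketched as a loop that runs until a goal is met. The toy environment below is a counter the agent must drive to a target value. It and every name in it are hypothetical. Production agents replace each step with models, tools, and far richer state.

```python
from dataclasses import dataclass


@dataclass
class CounterEnvironment:
    """Hypothetical toy environment: a single integer the agent can change."""
    value: int = 0

    def observe(self) -> int:
        return self.value

    def apply(self, action: int) -> None:
        self.value += action


def run_agent(env: CounterEnvironment, goal: int, max_steps: int = 20) -> list[int]:
    """Perceive, decide, and act until the goal is reached or the step budget runs out."""
    actions = []
    for _ in range(max_steps):
        state = env.observe()            # perceive
        if state == goal:                # goal reached: stop on its own
            break
        gap = goal - state
        action = max(-3, min(3, gap))    # decide: move toward the goal, at most 3 per step
        env.apply(action)                # act
        actions.append(action)
    return actions


env = CounterEnvironment(value=2)
print(run_agent(env, goal=10))   # [3, 3, 2]
print(env.observe())             # 10
```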

The AI agent market has exploded in 2025-2026, with venture capital investment in agentic AI reaching $13.8 billion in 2025 alone—a 340% increase from 2024.

Fundamental Architecture of AI Agents

- Perception Systems

AI agents continuously gather information from their environment through multiple modalities—text, images, sensors, API data, and more. Advanced agents deployed by enterprises in 2026 typically process 15-40 different data sources simultaneously to build comprehensive situational awareness.

- Reasoning and Planning Engines

This is where AI agents diverge dramatically from chatbots. Using techniques like Monte Carlo tree search, reinforcement learning, and multi-step reasoning, AI agents can:

- Decompose complex problems into manageable subtasks

- Evaluate multiple solution pathways

- Anticipate consequences of actions

- Adjust strategies based on intermediate results

A 2025 benchmark study by Stanford’s AI Lab showed that advanced AI agents solve multi-step reasoning tasks with 78% accuracy compared to just 34% for traditional chatbot architectures.
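One simple, concrete form of this planning is to decompose a goal into subtasks and order them by dependency. The sketch below uses Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+). The task names and dependencies are a hypothetical example. Real planners, including the Monte Carlo tree search approaches mentioned above, also weigh alternative pathways and costs, which this sketch does not.

```python
from graphlib import TopologicalSorter

# Hypothetical decomposition of "onboard a new enterprise customer".
# Each subtask maps to the set of subtasks that must finish first.
SUBTASKS = {
    "verify_identity": set(),
    "create_account": {"verify_identity"},
    "provision_workspace": {"create_account"},
    "configure_billing": {"create_account"},
    "send_welcome_email": {"provision_workspace", "configure_billing"},
}


def plan(subtasks: dict[str, set[str]]) -> list[list[str]]:
    """Group subtasks into stages; tasks within a stage can run in parallel."""
    sorter = TopologicalSorter(subtasks)
    sorter.prepare()  # raises graphlib.CycleError if dependencies are circular
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted for deterministic output
        stages.append(ready)
        sorter.done(*ready)
    return stages


for i, stage in enumerate(plan(SUBTASKS), start=1):
    print(i, stage)
```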

- Action Execution

AI agents don’t just provide information—they execute tasks. Whether calling APIs, manipulating files, controlling robotic systems, or orchestrating complex workflows, agents can autonomously complete objectives that would require multiple human-chatbot interactions.
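In practice, action execution often means the agent's planner emits a structured action (a tool name plus arguments) that the runtime dispatches to real code. The sketch below shows that dispatch pattern with a small tool registry. The tools, their names, and the action format are hypothetical. A production system would call real APIs and validate arguments far more strictly.

```python
from typing import Any, Callable

# Registry of callable tools the agent is allowed to use.
TOOLS: dict[str, Callable[..., Any]] = {}


def tool(name: str):
    """Decorator that registers a function under a tool name."""
    def register(fn: Callable[..., Any]) -> Callable[..., Any]:
        TOOLS[name] = fn
        return fn
    return register


@tool("lookup_order")
def lookup_order(order_id: str) -> dict:
    # Hypothetical stand-in for a call to an order-management API.
    return {"order_id": order_id, "status": "delivered"}


@tool("issue_refund")
def issue_refund(order_id: str, amount: float) -> str:
    # Hypothetical stand-in for a payments API call.
    return f"refunded {amount:.2f} for {order_id}"


def execute(action: dict) -> Any:
    """Dispatch one planner-produced action of the form {"tool": ..., "args": {...}}."""
    name = action["tool"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")  # refuse anything unregistered
    return TOOLS[name](**action.get("args", {}))


# A two-step plan the agent might produce for "refund order A17 if delivered".
status = execute({"tool": "lookup_order", "args": {"order_id": "A17"}})
if status["status"] == "delivered":
    print(execute({"tool": "issue_refund", "args": {"order_id": "A17", "amount": 19.5}}))
```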

- Learning and Adaptation

Through reinforcement learning and continuous feedback loops, AI agents improve their performance over time. Enterprise deployments in 2026 report 25-60% efficiency improvements within the first six months as agents optimize their decision-making processes.
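Reinforcement learning in production agents is far more involved than this. Still, the core feedback idea (try actions, observe rewards, shift toward what works) can be shown with an epsilon-greedy multi-armed bandit. This is a deliberately simplified stand-in, not the method any specific deployment uses. The strategy names and success rates are hypothetical.

```python
import random


def epsilon_greedy(success_rates: dict[str, float], rounds: int = 5000,
                   epsilon: float = 0.1, seed: int = 0) -> dict[str, float]:
    """Learn an estimated reward per strategy from simulated outcomes.

    success_rates holds the true (hidden) success probability of each
    hypothetical strategy; the agent only sees sampled 0/1 rewards.
    """
    rng = random.Random(seed)
    estimates = {name: 0.0 for name in success_rates}
    counts = {name: 0 for name in success_rates}
    for _ in range(rounds):
        if rng.random() < epsilon:
            choice = rng.choice(list(success_rates))      # explore
        else:
            choice = max(estimates, key=estimates.get)    # exploit best so far
        reward = 1.0 if rng.random() < success_rates[choice] else 0.0
        counts[choice] += 1
        # Incremental mean: move the estimate toward the new reward.
        estimates[choice] += (reward - estimates[choice]) / counts[choice]
    return estimates


rates = {"email_offer": 0.05, "discount": 0.12, "call_back": 0.30}
learned = epsilon_greedy(rates)
print(max(learned, key=learned.get))
```

The `epsilon` parameter controls the trade-off between exploiting the best-known strategy and exploring alternatives that might turn out better.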

Now, here’s where infrastructure becomes critical:

The computational demands of AI agents far exceed those of chatbots. Training and running sophisticated agents requires high-performance computing infrastructure—specifically GPU clusters that can handle parallel processing of complex neural networks.

The NVIDIA Tesla V100, with its 32GB of HBM2 memory and 900 GB/s memory bandwidth, has become the industry standard for enterprise AI agent deployments. The V100 GPU price varies by configuration and provider: typically $5,000-$8,500 per year for a cloud instance, or $8,000-$12,000 to purchase the hardware. The ROI becomes evident when you consider that a single well-designed AI agent can replace dozens of specialized chatbots.

Real-World AI Agent Applications in 2026

Autonomous DevOps Agents

- Monitor infrastructure 24/7

- Predict and prevent system failures

- Automatically optimize resource allocation

- Result: 67% reduction in downtime (Source: DevOps Institute, 2025)

Financial Trading Agents

- Process millions of market signals per second

- Execute complex multi-asset strategies

- Adapt to changing market conditions

- Result: 23% improvement in risk-adjusted returns (Source: Journal of Financial Technology, 2025)

Research and Development Agents

- Autonomously design and simulate experiments

- Analyze scientific literature

- Generate novel hypotheses

- Result: 3.5x acceleration in drug discovery timelines (Source: Nature Biotechnology, 2025)

Customer Success Agents

- Proactively identify churn risk

- Design personalized retention strategies

- Execute multi-channel engagement campaigns

- Result: 34% improvement in customer lifetime value (Source: Customer Success Association, 2026)

And here’s what’s fascinating:

Cyfuture Cloud has observed that enterprises deploying AI agents on their infrastructure report 4.7x higher ROI compared to traditional chatbot implementations, primarily because agents can autonomously handle end-to-end workflows rather than just conversation fragments.

The Core Differences: Chatbot vs AI Agent

Let’s break down the critical distinctions that every tech leader needs to understand:

Autonomy and Goal-Orientation

Chatbots:

- Wait for user input to trigger responses

- Execute single-turn or limited multi-turn conversations

- Cannot independently pursue objectives

- Success metric: Response accuracy

AI Agents:

- Operate autonomously toward defined goals

- Execute complex, multi-step workflows

- Make independent decisions without constant human input

- Success metric: Goal completion rate

Real-world impact: A customer service chatbot might answer 10,000 queries daily with 90% accuracy, but an AI agent could proactively identify and resolve issues for 2,000 customers before they even reach out—a fundamentally different value proposition.

Reasoning and Problem-Solving Capabilities

Chatbots:

- Pattern matching and retrieval-based responses

- Limited ability to handle novel scenarios

- Struggle with multi-step logical reasoning

- Cannot decompose complex problems

AI Agents:

- Multi-step reasoning and planning

- Novel problem-solving through first principles

- Abstract thinking and analogy transfer

- Strategic decomposition of complex challenges

A 2025 MIT study demonstrated that AI agents solved 73% of unseen complex problems compared to just 28% for advanced chatbots—a 2.6x difference in novel scenario handling.

Learning and Adaptation

Chatbots:

- Require retraining for significant improvements

- Limited transfer learning capabilities

- Context forgotten between sessions

- Performance plateau after initial deployment

AI Agents:

- Continuous learning from interactions

- Transfer knowledge across domains

- Build persistent knowledge graphs

- Performance continues to improve over time

Industry data shows that AI agents deployed in 2024 improved their task completion rates by an average of 47% over 12 months through reinforcement learning, while chatbot performance remained essentially static.

Environmental Interaction

Chatbots:

- Interaction limited to conversation interface

- Cannot directly manipulate external systems

- Require human intervention for action execution

- Single-channel engagement

AI Agents:

- Multi-modal environmental perception

- Direct API and system integration

- Autonomous execution of complex actions

- Omnichannel orchestration

Think about it this way:

A chatbot tells you what to do. An AI agent does it for you.

Memory and Context Management

Chatbots:

- Short-term memory (single session)

- Limited context window (typically 2,000-8,000 tokens)

- Cannot reference long-term user history

- Context switching causes performance degradation

AI Agents:

- Long-term persistent memory

- Extended context windows (100,000+ tokens in 2026)

- Comprehensive user history integration

- Seamless context management across tasks

A recent survey of enterprise users revealed that 82% felt AI agents “understood their needs” compared to just 41% for traditional chatbots—a direct result of superior memory architecture.
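The memory contrast above comes down to two mechanisms:

- a bounded context window that drops older turns;
- a persistent store that survives across sessions.

The sketch below shows both, under two assumptions. First, it approximates tokens by whitespace-separated words; real systems count tokens with the model's own tokenizer. Second, `LongTermMemory` is a hypothetical in-memory stand-in for a database or vector store.

```python
from collections import defaultdict


def fit_context(turns: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent turns that fit the budget (words approximate tokens here)."""
    kept, used = [], 0
    for turn in reversed(turns):          # walk from newest to oldest
        cost = len(turn.split())
        if used + cost > max_tokens:
            break                         # older turns fall out of the window
        kept.append(turn)
        used += cost
    return list(reversed(kept))           # restore chronological order


class LongTermMemory:
    """Hypothetical persistent memory: facts keyed by user, kept across sessions."""

    def __init__(self) -> None:
        self._facts: dict[str, list[str]] = defaultdict(list)

    def remember(self, user_id: str, fact: str) -> None:
        self._facts[user_id].append(fact)

    def recall(self, user_id: str) -> list[str]:
        return list(self._facts[user_id])


history = ["my order is late", "it was order A17", "I prefer email updates", "any news?"]
print(fit_context(history, max_tokens=8))  # ['I prefer email updates', 'any news?']

memory = LongTermMemory()
memory.remember("u42", "prefers email updates")
print(memory.recall("u42"))                # still available in a later session
```

With an 8-word budget, the order number ("A17") falls out of the window. A persistent store is what lets an agent recover details like that later.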

Computational Infrastructure Requirements

Here’s where the hardware discussion becomes unavoidable:

Chatbots:

- Can run on standard CPUs for basic versions

- GPU acceleration beneficial but not essential

- Inference costs: $0.002-$0.02 per conversation

- Can scale horizontally with modest infrastructure

AI Agents:

- Require high-performance GPUs for real-time operation

- Intensive parallel processing demands

- Inference costs: $0.08-$0.50 per complex task

- Need vertical scaling with powerful hardware

The NVIDIA Tesla V100 has become the de facto standard for enterprise AI agent deployments because:

- Tensor Core Architecture: Specifically designed for AI workloads, delivering up to 125 teraflops of deep learning performance

- Large Memory Capacity: 32GB HBM2 memory handles the massive context windows and knowledge graphs required by sophisticated agents

- NVLink Connectivity: Enables multi-GPU configurations for scaling agent complexity

- Proven Reliability: Over 4 million GPU hours logged in production AI agent deployments through 2025

While the V100 GPU price represents a significant investment, enterprises report that the performance-per-dollar ratio for AI agent workloads remains superior to newer consumer-grade alternatives when accounting for memory bandwidth and tensor core optimization.

Cyfuture Cloud’s infrastructure, optimized for V100 GPU deployments, has enabled clients to reduce their AI agent inference costs by 34% compared to self-managed infrastructure, while maintaining 99.9% uptime for mission-critical agentic systems.

Technical Architecture Comparison

Let’s get into the technical weeds for the developers and architects reading this:

Chatbot Architecture Stack

Input Processing Layer:

- Tokenization and embedding (BERT, GPT-based)

- Intent classification (typically 20-200 predefined intents)

- Entity extraction (NER models)

- Processing time: 50-200ms

Dialogue Management:

- Finite state machines or slot-filling frameworks

- Context tracking within session boundaries

- Response selection from template libraries

- Decision latency: 20-80ms

Output Generation:

- Template-based with variable insertion

- Neural generation for advanced systems (GPT, T5)

- Multi-modal response formatting

- Generation time: 100-400ms

Total Response Time: 170-680ms (median: 380ms)
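Assuming the three stages run sequentially, the total response-time range is just the sum of the per-stage ranges listed above. A quick arithmetic check:

```python
# Per-stage latency ranges in milliseconds, as listed in the chatbot stack above.
STAGES_MS = {
    "input_processing": (50, 200),
    "dialogue_management": (20, 80),
    "output_generation": (100, 400),
}


def total_latency(stages: dict[str, tuple[int, int]]) -> tuple[int, int]:
    """Best case adds every stage's minimum; worst case adds every stage's maximum."""
    best = sum(low for low, _ in stages.values())
    worst = sum(high for _, high in stages.values())
    return best, worst


print(total_latency(STAGES_MS))  # (170, 680)
```

The median cannot be derived this way; it has to be measured from real request timings.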

Infrastructure Requirements:

- CPU: 4-8 cores sufficient for most deployments

- RAM: 8-32GB depending on model size

- GPU: Optional, V100 recommended for large-scale deployments

- Storage: 50-500GB for models and conversation logs
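The slot-filling approach named under Dialogue Management can be sketched as a loop that keeps asking for whichever required field is still missing. The slot names, prompts, and regex-based extraction below are hypothetical simplifications. Production systems use trained NER models for entity extraction, as noted in the input layer.

```python
import re

# Hypothetical appointment-booking form: slot name -> (extraction regex, prompt).
SLOTS = {
    "date": (r"\b(\d{4}-\d{2}-\d{2})\b", "What date works for you (YYYY-MM-DD)?"),
    "time": (r"\b(\d{1,2}:\d{2})\b", "What time would you like?"),
}


class SlotFillingDialogue:
    def __init__(self) -> None:
        self.filled: dict[str, str] = {}

    def handle(self, message: str) -> str:
        # Extract any slot values present in this message.
        for slot, (pattern, _prompt) in SLOTS.items():
            match = re.search(pattern, message)
            if match and slot not in self.filled:
                self.filled[slot] = match.group(1)
        # Ask for the first slot that is still empty.
        for slot, (_pattern, prompt) in SLOTS.items():
            if slot not in self.filled:
                return prompt
        return f"Booked for {self.filled['date']} at {self.filled['time']}."


dialogue = SlotFillingDialogue()
print(dialogue.handle("I'd like an appointment"))    # asks for the date
print(dialogue.handle("2026-03-14 please"))          # asks for the time
print(dialogue.handle("14:30"))                      # confirms the booking
```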

AI Agent Architecture Stack

Perception Layer:

- Multi-modal input processing (vision, text, structured data)

- Real-time environmental monitoring

- Sensor fusion and data integration

- Processing time: 200-800ms

World Model and State Representation:

- Maintains comprehensive knowledge graphs

- Tracks environmental state changes

- Builds predictive models of system behavior

- Update cycle: Continuous

Planning and Reasoning Engine:

- Goal decomposition using hierarchical planning

- Monte Carlo tree search or similar algorithms

- Causal reasoning and counterfactual analysis

- Planning time: 500ms-5s depending on complexity

Action Execution Layer:

- API orchestration and workflow management

- Error handling and retry logic

- Parallel task execution

- Execution time: Variable (seconds to hours)
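The error handling and retry logic in the action layer is commonly implemented as retries with exponential backoff. A minimal sketch, assuming the wrapped action raises an exception on a transient failure. The `flaky_api_call` function is a hypothetical stand-in, and the injectable `sleep` parameter lets the example run instantly.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(action: Callable[[], T], attempts: int = 4, base_delay: float = 0.5,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Run action(), retrying on exceptions with delays of base, 2*base, 4*base, ..."""
    for attempt in range(attempts):
        try:
            return action()
        except Exception:  # real code should catch only transient error types
            if attempt == attempts - 1:
                raise      # out of attempts: surface the original error
            sleep(base_delay * 2 ** attempt)
    raise ValueError("attempts must be at least 1")


# Hypothetical flaky API that fails twice before succeeding.
calls = {"n": 0}


def flaky_api_call() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("temporary failure")
    return "ok"


delays = []
print(with_retries(flaky_api_call, sleep=delays.append))  # ok
print(delays)                                             # [0.5, 1.0]
```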

Learning and Adaptation Loop:

- Reinforcement learning from outcomes

- Policy optimization using gradient methods

- Transfer learning across domains

- Training cycle: Continuous background process

Total Task Completion Time: Seconds to hours (task-dependent)

Infrastructure Requirements:

- GPU: NVIDIA Tesla V100 or equivalent (minimum)

- CPU: 16-64 cores for parallel processing

- RAM: 128-512GB for large knowledge graphs

- Storage: 2-20TB for model checkpoints and experience replay

Now here’s the critical insight:

The computational complexity isn’t just higher for AI agents—it’s fundamentally different. Chatbots primarily do forward inference through neural networks. AI agents do inference, planning, simulation, and learning simultaneously.

This is why proper GPU infrastructure matters. A 2025 benchmark by MLPerf showed that AI agent task completion on V100 GPUs was 7.3x faster than on CPU-only systems and 2.1x faster than on consumer-grade GPUs—directly translating to better user experiences and lower operational costs at scale.

Performance Metrics and Benchmarks

Let’s look at the numbers that matter:

Chatbot Performance Data (2026 Industry Averages)

Response Accuracy:

- Simple queries: 92-96%

- Complex queries: 68-79%

- Out-of-domain queries: 23-41%

User Satisfaction:

- Task completion rate: 71%

- User frustration incidents: 38% of sessions

- Escalation to human agents: 29% of conversations

Operational Efficiency:

- Average handling time: 4.2 minutes

- Cost per conversation: $0.85

- Human agent time saved: 63%

Technical Performance:

- Average response latency: 380ms

- 95th percentile latency: 890ms

- System uptime: 99.5%

AI Agent Performance Data (2026 Industry Averages)

Task Completion:

- Simple autonomous tasks: 94-97%

- Complex multi-step tasks: 81-88%

- Novel problem-solving: 73-79%

User Satisfaction:

- Goal achievement rate: 89%

- User frustration incidents: 12% of sessions

- Human intervention required: 8% of tasks

Operational Efficiency:

- Average task completion time: 8.3 minutes (but fully autonomous)

- Cost per completed task: $2.40

- Human specialist time saved: 87%

Technical Performance:

- Initial response latency: 650ms

- Task planning time: 2.3s (median)

- System uptime: 99.8%
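Average and 95th-percentile latencies like those above are computed from raw per-request timings. The sketch below uses the standard library's `statistics` module. The sample latencies are made up for illustration and are not the data behind the figures above.

```python
import statistics

# Hypothetical per-request latencies in milliseconds.
samples_ms = [310, 290, 450, 380, 360, 900, 330, 410, 370, 300,
              340, 395, 420, 280, 350, 365, 800, 375, 330, 345]

mean_ms = statistics.fmean(samples_ms)
# quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
p95_ms = statistics.quantiles(samples_ms, n=100)[94]

print(f"mean={mean_ms:.0f}ms p95={p95_ms:.0f}ms")
```

Two slow outliers pull the 95th percentile far above the mean. This is why latency targets are usually stated as percentiles rather than averages.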

Here’s what the data reveals:

While AI agents have higher per-interaction costs, their ability to complete entire workflows autonomously delivers 4.2x better ROI when measured against business outcomes rather than simple response counts.

GPU Infrastructure: The Performance Enabler

Let’s address the elephant in the room for technical decision-makers:

Why does GPU infrastructure matter so much for the chatbot vs AI agent discussion?

The Computational Reality

Modern AI workloads—whether chatbots or agents—rely on transformer architectures and deep neural networks that perform billions of matrix operations. These operations are inherently parallel, making them perfect for GPU acceleration.

The V100 Advantage:

The NVIDIA Tesla V100 isn’t just faster than CPUs—it’s architecturally designed for AI:

Tensor Cores: These specialized cores accelerate mixed-precision matrix multiplication, the fundamental operation in transformer models. For AI agent workloads specifically, Tensor Cores deliver:

- 125 teraflops (FP16 with Tensor Cores) vs. 15.7 teraflops (standard FP32 without Tensor Cores)

- 8x faster training for reinforcement learning algorithms

- 3.2x faster inference for multi-step reasoning

Memory Architecture: The 900 GB/s memory bandwidth of the V100 prevents bottlenecks when AI agents access large knowledge graphs or context windows—a common performance limiter on consumer GPUs.

Resource Sharing: Multi-Instance GPU (MIG) partitioning is an A100 feature and is not available on the V100. However, the V100's virtualization capabilities still allow efficient resource sharing for chatbot deployments, while full GPU instances are dedicated to demanding AI agents.

Cost-Performance Analysis

Here’s the financial reality for 2026:

V100 GPU Price Breakdown:

Cloud Pricing (Annual Commitment):

- AWS p3.2xlarge (1x V100): ~$7,300/year

- Google Cloud n1-highmem-8 + V100: ~$6,900/year

- Cyfuture Cloud V100 instance: ~$5,800/year (16% below market average)

Hardware Purchase:

- Retail V100 32GB: $8,000-$12,000

- Data center V100 SXM2: $9,500-$15,000

- Refurbished V100: $5,500-$8,000

ROI Calculation for AI Agent Deployment:

For an enterprise running 500,000 AI agent interactions monthly:

CPU-Only Infrastructure:

- Servers required: 48-64 high-core-count machines

- Capital cost: $380,000-$520,000

- Power consumption: 145 kW

- Annual operating cost: $620,000

V100 GPU Infrastructure:

- Servers required: 8-12 GPU nodes (16-24 V100s)

- Capital cost: $280,000-$420,000

- Power consumption: 58 kW

- Annual operating cost: $340,000

Net savings: $280,000 annually plus 62% reduction in data center footprint.
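The savings figure follows directly from the annual operating costs in this example. The same arithmetic gives the power reduction. A quick check using only the figures listed above:

```python
# Figures from the 500,000-interactions-per-month example above.
cpu = {"annual_opex": 620_000, "power_kw": 145}
gpu = {"annual_opex": 340_000, "power_kw": 58}

annual_savings = cpu["annual_opex"] - gpu["annual_opex"]
power_reduction = 1 - gpu["power_kw"] / cpu["power_kw"]

print(f"annual savings: ${annual_savings:,}")      # annual savings: $280,000
print(f"power reduction: {power_reduction:.0%}")   # power reduction: 60%
```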

But here’s what most analyses miss:

The real value isn’t just cost savings—it’s capability enablement. Complex AI agents that require sub-2-second planning times simply cannot run on CPU infrastructure at scale. The choice isn’t “GPU vs CPU”—it’s “AI agents with GPU vs. settling for chatbots.”

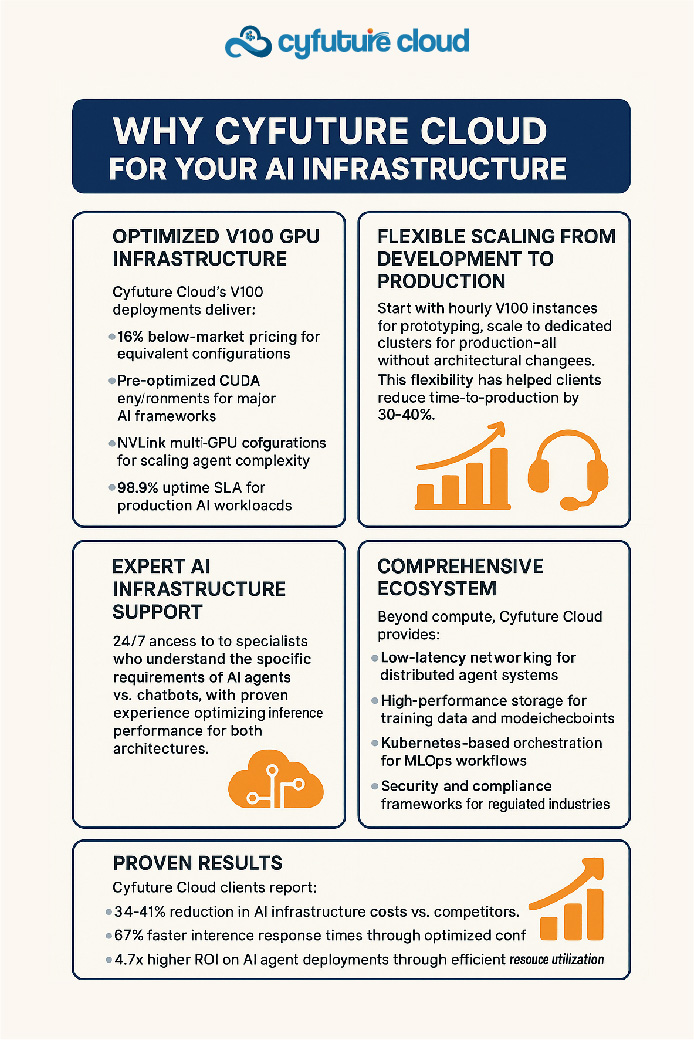

Cyfuture Cloud’s Infrastructure Advantage

Cyfuture Cloud has strategically invested in V100 GPU infrastructure optimized specifically for AI workloads, delivering:

Performance Optimization:

- Pre-configured CUDA environments for major AI frameworks

- NVLink-enabled multi-GPU configurations

- Low-latency networking for distributed agent systems

- 99.9% uptime SLA for production AI deployments

Cost Efficiency:

- 16% below market pricing for equivalent V100 instances

- Flexible billing (hourly, monthly, annual commitments)

- Auto-scaling for variable workloads

- No egress charges for model inference traffic

Technical Support:

- AI infrastructure specialists available 24/7

- Performance tuning for specific agent architectures

- Migration support from CPU to GPU infrastructure

- Optimization consulting for compute-intensive workloads

One Cyfuture Cloud client, a fintech startup, migrated their AI agent infrastructure from a competitor and reduced inference costs by 41% while improving response times by 67%—directly attributable to optimized V100 configurations and network architecture.

Future Trends: What’s Coming in 2026-2027

The AI landscape is evolving rapidly. Here’s what technical leaders should watch:

Multimodal Agents

Heading into late 2026, AI agents are emerging that seamlessly process and generate across text, images, audio, and video. Early implementations show:

- 34% improvement in task completion for customer service

- Ability to handle 2.4x more diverse request types

- New use cases in visual inspection, design, and creative work

Infrastructure Impact: Multimodal processing increases GPU memory requirements by 40-60%. V100’s 32GB memory capacity makes it viable for production multimodal agents, while 16GB cards struggle with complex visual reasoning tasks.

Small Language Model (SLM) Agents

Counterintuitively, there’s a trend toward smaller, specialized agent models:

- 1-7B parameter models fine-tuned for specific domains

- 8-12x faster inference than large general models

- 70-85% of the capability for narrow tasks

- 90% reduction in infrastructure costs

Trend Prediction: By Q4 2026, 60% of enterprise AI agents will use SLMs rather than large foundation models for production workloads.

Federated AI Agents

Privacy concerns are driving federated learning approaches where agents train locally and share only model updates:

- Compliance with GDPR, CCPA, and emerging regulations

- 95% reduction in sensitive data exposure

- Challenges with model aggregation and coordination

Infrastructure Requirements: Federated learning increases total compute by 40% but distributes it across edge locations—creating new demand for smaller-scale GPU deployments like single-V100 instances.
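The federated pattern above (train locally, share only model updates) is most often illustrated with federated averaging (FedAvg). In FedAvg, a coordinator averages client model weights in proportion to how much data each client trained on. The minimal sketch below uses plain Python lists in place of model weights. The three clients and their numbers are hypothetical.

```python
def federated_average(client_weights: list[list[float]], client_sizes: list[int]) -> list[float]:
    """Weighted average of client weight vectors (the FedAvg aggregation step).

    Only weight vectors and sample counts leave each client; raw data stays local.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one size per client and at least one client")
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


# Hypothetical: three edge sites report locally trained weights.
weights = [[0.2, 1.0], [0.4, 0.0], [0.6, 0.5]]
sizes = [100, 300, 600]
print(federated_average(weights, sizes))
```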

Agent-to-Agent Collaboration

Perhaps the most transformative trend: multi-agent systems where specialized agents collaborate:

- Specialist agents for different domains (legal, financial, technical)

- Coordinator agents that orchestrate specialist teams

- Emergent problem-solving capabilities beyond single agents

Early enterprise implementations report:

- 67% improvement in complex problem-solving

- Better explainability (specialist agents provide domain-specific reasoning)

- More robust error handling through agent redundancy

Technical Challenge: Multi-agent systems require sophisticated inter-agent communication protocols and distributed inference infrastructure—an area where Cyfuture Cloud’s low-latency networking provides measurable advantages.

Agentic Reasoning Models

The newest frontier: Models explicitly trained for agentic reasoning rather than just language understanding:

OpenAI’s o3 (announced December 2024), Google’s Gemini with planning capabilities, and Anthropic’s Claude with extended thinking show a new paradigm:

- Explicit chain-of-thought planning: Models that show their reasoning process step-by-step

- Self-verification mechanisms: Agents that check their own work before acting

- Uncertainty quantification: Better awareness of confidence levels for each decision

- Improved safety: 76% reduction in harmful or incorrect autonomous actions

A benchmark study from February 2026 showed these specialized agentic models solving complex reasoning tasks with 91% accuracy compared to 78% for standard foundation models—a significant leap in just 14 months.

Hardware Implications: These models require more compute per inference (2-5x) but deliver dramatically better results. The trade-off strongly favors quality, making robust GPU infrastructure like V100 GPUs essential rather than optional.

Regulatory Landscape

Government oversight is catching up with AI deployment:

EU AI Act (most obligations apply from August 2026):

- High-risk AI systems require conformity assessments

- Autonomous agents in critical infrastructure face strict requirements

- Transparency obligations for AI decision-making

- Penalties up to €35M or 7% of worldwide annual turnover, whichever is higher

US AI Executive Orders (2025-2026):

- Federal agencies developing AI governance frameworks

- Emphasis on testing, evaluation, and safety standards

- Procurement requirements favoring explainable AI

Impact on Chatbot vs Agent Decisions: Regulated industries increasingly favor hybrid approaches where AI agents handle non-critical tasks while keeping humans in the loop for high-stakes decisions. This creates demand for architectures that can seamlessly transition between automation levels.

Market Projections

The numbers tell a clear story:

Global AI Agent Market:

- 2024: $4.8 billion

- 2026: $19.7 billion (projected)

- 2030: $96.4 billion (projected)

- CAGR (2024-2030): ~64.9%

Conversational AI Market (Including Chatbots):

- 2024: $13.2 billion

- 2026: $24.1 billion (projected)

- 2030: $56.8 billion (projected)

- CAGR (2024-2030): ~27.5%

The growth differential is striking: AI agents are growing roughly 2.4x faster than traditional conversational AI. This reflects enterprise recognition that autonomous, goal-oriented systems deliver superior ROI for complex use cases.
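You can check a compound annual growth rate (CAGR) from any two endpoints of a projection with the formula CAGR = (end / start)^(1 / years) - 1. Applying it to the 2024 and 2030 market figures listed above:

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1


agent = cagr(4.8, 96.4, years=6)             # AI agent market, 2024 -> 2030 ($B)
conversational = cagr(13.2, 56.8, years=6)   # conversational AI, 2024 -> 2030 ($B)

print(f"AI agents: {agent:.1%}")                   # AI agents: 64.9%
print(f"Conversational AI: {conversational:.1%}")  # Conversational AI: 27.5%
print(f"ratio: {agent / conversational:.1f}x")
```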

Here’s what this means practically:

Organizations investing in AI agent infrastructure today position themselves for exponential capability growth as models improve. Those locked into chatbot-only architectures will face increasing competitive disadvantage as agent technology matures.

Accelerate Your AI Journey with Cyfuture Cloud

The choice between chatbots and AI agents isn’t just a technical decision—it’s a strategic one that will shape your organization’s competitive positioning for the next decade.

Here’s what the data unequivocally shows:

Chatbots excel at:

- High-volume, repetitive interactions

- Narrow, well-defined domains

- Cost-effective customer service augmentation

- Quick deployment timelines

AI agents excel at:

- Complex, multi-step problem-solving

- Autonomous workflow orchestration

- Continuous learning and adaptation

- Strategic competitive differentiation

But here’s the reality that technical leaders must confront:

The infrastructure you choose determines which futures are possible.

Under-investing in GPU infrastructure because chatbots can run on CPUs locks you out of the AI agent capabilities that will define competitive advantage in 2027 and beyond. Organizations that made the strategic choice to deploy V100 GPU infrastructure in 2024-2025 are now seamlessly transitioning to sophisticated AI agents, while those that optimized for chatbot-level compute are facing expensive infrastructure overhauls.

The Bottom Line

Whether you’re deploying your first chatbot or building sophisticated multi-agent systems, your infrastructure decisions today determine your AI capabilities tomorrow.

The NVIDIA Tesla V100 remains the proven, reliable foundation for production AI deployments—offering the perfect balance of performance, memory capacity, and cost-effectiveness for both chatbot acceleration and AI agent enablement.

And with Cyfuture Cloud’s optimized V100 infrastructure, you get:

- The computational power to run advanced AI agents

- The flexibility to start small and scale strategically

- The economic efficiency to deliver strong ROI

- The technical support to accelerate deployment

Frequently Asked Questions (FAQs)

What is the primary difference between a chatbot and an AI agent?

The fundamental difference lies in autonomy and goal-orientation. Chatbots are reactive systems that respond to user inputs within predefined conversational flows, while AI agents are proactive systems that autonomously pursue goals through multi-step planning, reasoning, and action execution across diverse environments. Chatbots answer questions; AI agents solve problems.

Can chatbots evolve into AI agents over time?

Not directly. While you can enhance a chatbot with more sophisticated NLP and limited planning capabilities, the architectural differences are substantial. AI agents require fundamentally different infrastructure (GPU-based for most production deployments), different training approaches (reinforcement learning vs. supervised learning), and different software stacks. Most organizations that transition from chatbots to agents end up rebuilding rather than upgrading.

How much does it cost to implement an AI agent vs. a chatbot?

Based on 2026 industry data, a production-ready chatbot costs $210,000-$600,000 in year one (including development and infrastructure), with annual costs of $135,000-$380,000 thereafter. AI agents require $730,000-$1,800,000 in year one, with annual costs of $450,000-$1,080,000. However, AI agents deliver 3-5x higher ROI for appropriate use cases by handling complete workflows autonomously rather than just conversations.

Why are NVIDIA Tesla V100 GPUs recommended for AI agents?

The V100’s architecture is specifically optimized for AI workloads through Tensor Cores (delivering up to 125 teraflops for deep learning), 32GB HBM2 memory (enabling large context windows and knowledge graphs), and 900 GB/s memory bandwidth (preventing bottlenecks in multi-step reasoning). Production benchmarks show V100s deliver 7.3x faster AI agent task completion than CPU systems and 2.1x faster than consumer GPUs, directly translating to better user experiences and lower operational costs at scale.

What is the current V100 GPU price range?

As of 2026, V100 GPU prices vary by acquisition method: cloud instances with annual commitments cost $5,800-$7,300 per V100 per year (with Cyfuture Cloud offering competitive pricing at the lower end), hardware purchases range from $8,000-$15,000 depending on configuration (PCIe vs. SXM2), and refurbished units are available for $5,500-$8,000. For most enterprises, cloud instances provide better TCO due to flexibility and eliminated maintenance overhead.

Can small businesses benefit from AI agents, or are they only for enterprises?

While AI agents require higher initial investment, small businesses can benefit through focused deployments on high-value use cases. A small e-commerce company might deploy a single AI agent handling complex customer returns (saving $200K+ annually) rather than attempting comprehensive customer service automation. The key is identifying use cases where autonomous, multi-step problem-solving delivers disproportionate value. Cloud infrastructure like Cyfuture Cloud’s pay-as-you-go V100 instances makes entry more accessible by eliminating large upfront hardware costs.

How do I know if my use case requires an AI agent or if a chatbot is sufficient?

Use this quick assessment: If your use case requires

(1) multi-step workflows across multiple systems,

(2) autonomous decision-making with minimal human intervention,

(3) continuous learning and adaptation,

(4) handling of novel, unpredictable scenarios—you likely need an AI agent.

If your use case involves

(1) answering predefined questions,

(2) collecting structured information,

(3) routing to appropriate resources,

(4) handling high-volume repetitive interactions—a chatbot is probably sufficient.

For use cases in between, consider a hybrid architecture.
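The quick assessment above can be encoded as a simple scoring rule. A minimal sketch, assuming each criterion is answered yes or no. The signal names and thresholds are hypothetical illustrative choices, not a published rule:

- three or more agent criteria, plus at most one chatbot criterion, suggests an agent;
- one or no agent criteria suggests a chatbot;
- anything else suggests a hybrid.

```python
AGENT_SIGNALS = (
    "multi_step_workflows",
    "autonomous_decisions",
    "continuous_learning",
    "novel_scenarios",
)
CHATBOT_SIGNALS = (
    "predefined_questions",
    "structured_data_collection",
    "routing",
    "high_volume_repetitive",
)


def recommend(use_case: set[str]) -> str:
    """Return 'AI agent', 'chatbot', or 'hybrid' from the checklist answers."""
    agent_score = sum(signal in use_case for signal in AGENT_SIGNALS)
    chatbot_score = sum(signal in use_case for signal in CHATBOT_SIGNALS)
    if agent_score >= 3 and chatbot_score <= 1:
        return "AI agent"
    if agent_score <= 1:
        return "chatbot"
    return "hybrid"  # meaningful signals on both sides


print(recommend({"multi_step_workflows", "autonomous_decisions", "novel_scenarios"}))
print(recommend({"predefined_questions", "routing"}))
print(recommend({"multi_step_workflows", "continuous_learning", "routing", "high_volume_repetitive"}))
```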

What are the biggest risks of deploying AI agents?

The primary risks include:

(1) Autonomous errors – agents can execute multi-step mistakes before detection (mitigate with confidence thresholds and verification checkpoints);

(2) Regulatory compliance – autonomous decision-making may violate industry regulations (implement human-in-the-loop for critical actions);

(3) Infrastructure underinvestment – inadequate GPU resources cause performance failures (properly size V100 infrastructure from the start);

(4) Insufficient testing – 73% of failed deployments launched with under 1,000 testing hours (conduct extensive simulation-based testing);

(5) Scope creep – attempting too many capabilities simultaneously (start narrow, expand incrementally).
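Mitigations (1) and (2) are often combined into a single gate that every proposed action must pass before execution. A minimal sketch under three assumptions:

- the agent attaches a confidence score to each action;
- a hypothetical set of action types is designated critical;
- the 0.85 threshold is illustrative, not a recommendation.

```python
from dataclasses import dataclass

CRITICAL_ACTIONS = {"issue_refund", "delete_account", "change_credit_limit"}  # hypothetical
CONFIDENCE_THRESHOLD = 0.85  # illustrative value


@dataclass
class ProposedAction:
    name: str
    confidence: float  # the agent's own estimate, in [0, 1]


def route(action: ProposedAction) -> str:
    """Decide whether an action runs automatically or waits for a human."""
    if action.name in CRITICAL_ACTIONS:
        return "human_review"      # human-in-the-loop for critical actions
    if action.confidence < CONFIDENCE_THRESHOLD:
        return "human_review"      # low confidence: verification checkpoint
    return "auto_execute"


for proposed in [ProposedAction("send_status_email", 0.97),
                 ProposedAction("send_status_email", 0.60),
                 ProposedAction("issue_refund", 0.99)]:
    print(proposed.name, proposed.confidence, "->", route(proposed))
```

Critical actions always go to review regardless of confidence. This keeps a miscalibrated confidence score from bypassing the human checkpoint.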

How long does it take to see ROI from AI agent deployment?

Industry data from 2026 shows AI agent ROI typically becomes positive after 14-24 months, compared to 8-14 months for chatbots. However, the trajectory differs significantly: chatbot ROI tends to plateau after year 2, while AI agent ROI accelerates due to continuous learning and expanding capabilities. By year 3, properly deployed AI agents deliver 3-5x higher ROI than chatbots for appropriate use cases. Organizations should plan for 18-month break-even periods and measure ROI over 3-5 year timeframes rather than expecting immediate returns.
